Numerical Analysis/Power iteration examples

The power method is an eigenvalue algorithm that can be used to find the eigenvalue with the largest absolute value. In some exceptional cases, however, it may not numerically converge to the dominant eigenvalue and the dominant eigenvector. We first recall the definitions of the dominant eigenvalue and eigenvector, and then look at some of these exceptional examples.

Definitions

Let

,

,  ,....

,.... be the eigenvalues of an

be the eigenvalues of an  matrix

matrix  .

.

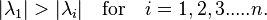

is called the dominant eigenvalue of A if

is called the dominant eigenvalue of A if

The eigenvectors corresponding to

are called dominant eigenvector of

are called dominant eigenvector of  . Next we should show some examples where the power method will not converge to the dominant eigenpair of a given matrix.

. Next we should show some examples where the power method will not converge to the dominant eigenpair of a given matrix.
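The definition can be checked numerically. The following is a minimal MATLAB sketch, assuming the built-in `eig` is accurate enough for a small matrix; it uses the matrix of the first example below, and the variable names are only illustrative.

```matlab
% Check whether a matrix has a dominant eigenvalue, following the definition above.
A = [1 2 1; -4 7 1; -1 -2 -1];             % matrix of the first example
lambda = eig(A);                            % all eigenvalues of A
[~, idx] = sort(abs(lambda), 'descend');    % order them by absolute value
lambda = lambda(idx);
% lambda(1) is dominant only if its absolute value is strictly the largest
isDominant = abs(lambda(1)) > abs(lambda(2));
fprintf('lambda_1 = %g, dominant: %d\n', lambda(1), isDominant);
```

In floating point one would normally compare the two absolute values with a small tolerance rather than with a plain `>`.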

Example: Bad starting guess

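Use the power method to find an eigenvalue and its corresponding eigenvector of the matrix

![A=\left[\begin{array}{c c c}1 & 2 & 1 \\-4 & 7 & 1 \\-1 & -2 & -1 \end{array} \right]](../I/m/2cd5ef2d16e39f547209762de01e15ad.png) ,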

starting with the vector

![X_0=\left[\begin{array}{c}1 \\1 \\ 1 \\\end{array} \right]](../I/m/e9c9aa94a73ad3c38f89cdab884e4948.png) .


We apply the power method and get

![Y_1 =A X_0 = \left[\begin{array}{c}4 \\4 \\ -4 \\\end{array} \right]](../I/m/6c8293c49a4f4f90431acae40137f816.png) ,

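where $\mu_1 = 4$ is an entry of $Y_1$ of largest absolute value (in general $X_k = Y_k/\mu_k$, with $\mu_k$ chosen this way), and it implies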

![X_1=\left[\begin{array}{c}1 \\1 \\ -1 \\\end{array} \right]](../I/m/24d488a6dfc9baef74b288fbff99cbe8.png) .


In this way,

![Y_2 =AX_1 =\left[\begin{array}{c}2 \\2 \\ -2 \\\end{array} \right]](../I/m/d2368ec508a42f1f1fcd5a717db67453.png) ,

so $\mu_2 = 2$, and it implies

![X_2 =\left[\begin{array}{c}1 \\1 \\ -1 \\\end{array} \right]](../I/m/611bbaf93e86307371acdf376187c40d.png) .

As we can see, the sequence $\mu_k$ converges to 2; in fact $X_k = X_1$ and $\mu_k = 2$ for every $k \ge 2$.
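The hand calculation above can be reproduced with a few lines of MATLAB. This is a minimal sketch, assuming the scaling rule used above (divide $Y_k$ by its entry of largest absolute value, keeping the sign; on ties `max` returns the first such entry); `nIter = 5` is an arbitrary choice.

```matlab
% Power method with scaling by the entry of largest magnitude,
% reproducing the hand calculation of this example.
A  = [1 2 1; -4 7 1; -1 -2 -1];
X  = [1; 1; 1];             % starting guess X_0
nIter = 5;
mu = zeros(nIter, 1);       % mu(k) is the scaling factor mu_k
for k = 1:nIter
    Y = A*X;                % Y_k = A X_{k-1}
    [~, j] = max(abs(Y));   % first entry of largest magnitude
    mu(k) = Y(j);           % keep the sign of that entry
    X = Y / mu(k);          % X_k = Y_k / mu_k
end
disp(mu');                  % 4 2 2 2 2
disp(X');                   % 1 1 -1
```

Here every quantity is a small integer, so the floating-point arithmetic is exact and $\mu_k$ stays at 2 however many steps are taken. Roundoff can only enter when some operation is inexact, such as the division by `norm(x)` in the MATLAB code below.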

Using the characteristic polynomial $\det(\lambda I - A) = \lambda^3 - 7\lambda^2 + 10\lambda = \lambda(\lambda - 2)(\lambda - 5)$, the eigenvalues of the matrix $A$ are $\lambda_1 = 5$, $\lambda_2 = 2$, and $\lambda_3 = 0$, so the dominant eigenvalue is 5, and our hand calculations converged to the second largest eigenvalue.
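The characteristic polynomial and its roots can also be obtained numerically. A short MATLAB sketch using the built-in `poly` and `roots` functions; the results carry small rounding errors, and the order of the roots is not guaranteed.

```matlab
% Characteristic polynomial and eigenvalues of this example's matrix.
A = [1 2 1; -4 7 1; -1 -2 -1];
p = poly(A);          % coefficients of det(lambda*I - A), highest power first
lambda = roots(p);    % roots of the characteristic polynomial = eigenvalues
disp(p);              % approximately 1 -7 10 0
disp(lambda');        % approximately 5, 2 and 0, in some order
```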

The problem is that our initial guess led us, after a single step, exactly to the eigenvector corresponding to $\lambda_2 = 2$: the starting vector has no component along the dominant eigenvector, so in exact arithmetic the iteration never sees $\lambda_1 = 5$. If we run this long enough on a computer, however, roundoff error will eventually introduce a component of the eigenvector for $\lambda_1 = 5$, and that component will dominate.
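The claim about the starting guess can be verified by hand. The eigenvectors below were worked out for this illustration (any nonzero multiples would serve equally well):

$$
\begin{aligned}
A\begin{bmatrix}2\\5\\-2\end{bmatrix} &= 5\begin{bmatrix}2\\5\\-2\end{bmatrix}, \qquad
A\begin{bmatrix}1\\1\\-1\end{bmatrix} = 2\begin{bmatrix}1\\1\\-1\end{bmatrix}, \qquad
A\begin{bmatrix}1\\1\\-3\end{bmatrix} = \begin{bmatrix}0\\0\\0\end{bmatrix},\\
X_0 = \begin{bmatrix}1\\1\\1\end{bmatrix} &= 2\begin{bmatrix}1\\1\\-1\end{bmatrix} - \begin{bmatrix}1\\1\\-3\end{bmatrix} + 0\cdot\begin{bmatrix}2\\5\\-2\end{bmatrix}
\quad\Longrightarrow\quad
AX_0 = 4\begin{bmatrix}1\\1\\-1\end{bmatrix}.
\end{aligned}
$$

The coefficient of the dominant eigenvector $[2, 5, -2]^T$ in $X_0$ is zero, so in exact arithmetic $A^k X_0 = 2^{k+1}[1, 1, -1]^T$ for every $k \ge 1$.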

This process can be reproduced in MATLAB. The code below plots the eigenvalue estimates (Rayleigh quotients) computed at each step.

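A = [1 2 1; -4 7 1; -1 -2 -1];   % matrix of this example
v = [1; 1; 1];                   % starting guess X_0
eest = zeros(100,1);             % eigenvalue estimate at each step
for i = 1:100
    x = A*v;                     % one power-method step
    e = (x'*v)/(v'*v);           % Rayleigh quotient v'*A*v / (v'*v)
    v = x/norm(x);               % normalize to keep the entries bounded
    eest(i) = e;
end
plot(eest);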

We can see the convergence of the eigenvalue estimates in the following figure:

We can draw the conclusion that if the starting guess has no component, or only a very small one, along the dominant eigenvector (as happens when it is very close to an eigenvector of the second largest eigenvalue), the estimates first converge to the second largest eigenvalue and reach the dominant eigenvalue only later; with no component at all, this happens only after roundoff has introduced one. Changing the starting guess fixes this problem.
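A quick way to see the effect of changing the starting guess is to rerun the iteration above from a slightly perturbed vector. In this sketch the perturbation of the second entry (`1.001`) is an arbitrary illustrative choice; it gives $X_0$ a nonzero component along the dominant eigenvector, so the estimates should stay close to 2 for a few steps and then move to 5.

```matlab
% Same iteration as above, but with a slightly perturbed starting guess.
% The value 1.001 is an illustrative choice, not taken from the example.
A = [1 2 1; -4 7 1; -1 -2 -1];
v = [1; 1.001; 1];
nIter = 100;
eest = zeros(nIter, 1);
for i = 1:nIter
    x = A*v;
    eest(i) = (x'*v)/(v'*v);   % Rayleigh quotient estimate
    v = x/norm(x);             % normalize
end
fprintf('final estimate: %.6f\n', eest(end));
plot(eest);
```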

Example: Complex eigenvalue

Consider the matrix

![A=\left[\begin{array}{c c c}3 & 2 & -2 \\-1 & 1 & 4 \\3 & 2 & -5 \end{array} \right]](../I/m/97cb5b016b53a3006f3aa0e95fa45534.png) .


Apply the power method to find an eigenvalue of the matrix, using the starting guess

![X_0 =\left[\begin{array}{c}1 \\1 \\ 1 \\\end{array} \right]](../I/m/e9c9aa94a73ad3c38f89cdab884e4948.png) .

We compute

![Y_1 =AX_0 =\left[\begin{array}{c}3 \\4 \\ 0 \\\end{array} \right]](../I/m/672bfaa9cf4b155aee2c185365709a1d.png) ,

thus $\mu_1 = 4$, and it implies

![X_1 = \left[\begin{array}{c}0.75 \\1 \\ 0 \\\end{array} \right]](../I/m/6fa632a124127c5fd52a99a4e028f5aa.png) .

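We continue with a few more iterations:

![AX_1 =\left[\begin{array}{c}4.25 \\0.25 \\ 4.25 \\\end{array} \right]](../I/m/a9b2cd18da019aae0edaef8a10987d6e.png) ,

so $\mu_2 = 4.25$, and it implies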

![X_2 =\left[\begin{array}{c}1 \\0.0588 \\ 1 \\\end{array} \right]](../I/m/b824b76bbee4066e3db2163518fae139.png) .

Next,

![AX_2 =\left[\begin{array}{c}1.1176 \\3.0588 \\ -1.8824 \\\end{array} \right]](../I/m/f6eb498fb2db010fa46aea88055125f7.png) ,

so $\mu_3 = 3.0588$, and it implies

$X_3 = \left[\begin{array}{c}0.3654 \\ 1 \\ -0.6154\end{array}\right]$.
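The hand calculation can be continued on a computer. A minimal MATLAB sketch, assuming the same scaling rule as in the first example (divide by the first entry of largest absolute value, keeping its sign); `nIter = 100` is an arbitrary choice.

```matlab
% Power method with largest-entry scaling for the matrix of this example.
A  = [3 2 -2; -1 1 4; 3 2 -5];
X  = [1; 1; 1];             % starting guess X_0
nIter = 100;
mu = zeros(nIter, 1);
for k = 1:nIter
    Y = A*X;                % Y_k = A X_{k-1}
    [~, j] = max(abs(Y));   % first entry of largest magnitude
    mu(k) = Y(j);           % mu_k keeps the sign of that entry
    X = Y / mu(k);          % X_k = Y_k / mu_k
end
disp(mu(1:3)');             % 4, 4.25 and 52/17 (about 3.0588)
fprintf('mu_%d = %.10f\n', nIter, mu(end));
```

The first three values reproduce $\mu_1$, $\mu_2$ and $\mu_3$ above; the last value is close to $-5$.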

We can see that the sequences $X_k$ and $\mu_k$ oscillate during the first iterations. In general, the power method applied to a real matrix with a real starting guess cannot converge when the dominant eigenvalues are a complex conjugate pair $\lambda$ and $\bar{\lambda}$: every iterate stays real, and since $|\lambda| = |\bar{\lambda}|$, neither eigenvalue dominates the other. Sometimes the sequences $X_k$ and $\mu_k$ may still be convergent, but then the convergence has nothing to do with the dominant eigenpair. For the matrix above, however, the characteristic polynomial is $\det(\lambda I - A) = \lambda^3 + \lambda^2 - 17\lambda + 15 = (\lambda - 1)(\lambda - 3)(\lambda + 5)$, so its eigenvalues are 1, 3 and $-5$. They are all real, $-5$ is the dominant eigenvalue, and the oscillation is only transient.
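The eigenvalues quoted above can be confirmed with MATLAB's built-in `eig` function. A minimal check (the results are accurate up to rounding):

```matlab
% Eigenvalues of the matrix of this example.
A = [3 2 -2; -1 1 4; 3 2 -5];
lambda = eig(A);
disp(sort(lambda)');   % approximately -5 1 3: all real, -5 is dominant
```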

We can see the convergence of the sequence $\mu_k$ from the figure below:

As we can see, the sequence $\mu_k$ converges to $-5$, which is exactly the dominant eigenvalue of this matrix, so here the power method works as usual once the initial oscillation dies out. For a matrix whose dominant eigenvalues really are complex conjugates of each other, the power method with a real starting guess does not converge. Changing the starting guess to a vector with complex entries does not remove the difficulty by itself: $\lambda$ and $\bar{\lambda}$ still have the same absolute value, so unless the starting vector has no component along one of the two eigenvectors, the direction of the iterates keeps changing.
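To see the failure itself, consider a matrix whose dominant eigenvalues really are a complex conjugate pair. The 90-degree rotation matrix below is a hypothetical example chosen for this sketch, not part of the original example; its eigenvalues are $i$ and $-i$, both of absolute value 1. With a real starting guess and the same largest-entry scaling as in the hand calculations, the iteration never settles:

```matlab
% Rotation by 90 degrees: eigenvalues i and -i, both of absolute value 1.
A  = [0 -1; 1 0];
X  = [1; 0];                % real starting guess
nIter = 6;
mu = zeros(nIter, 1);
for k = 1:nIter
    Y = A*X;
    [~, j] = max(abs(Y));   % first entry of largest magnitude
    mu(k) = Y(j);
    X = Y / mu(k);
end
disp(mu');                  % 1 -1 1 -1 1 -1: mu_k alternates and never converges
```

The vectors $X_k$ alternate between $[0, 1]^T$ and $[1, 0]^T$, because multiplying by $A$ only rotates them; no choice of scaling turns this into a convergent sequence.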

