The bisection method

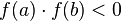

The bisection method is based on the theorem of existence of roots for continuous functions, which guarantees the existence of at least one root $\alpha$ of the function $f$ in the interval $[a, b]$ if $f(a)$ and $f(b)$ have opposite signs. If in $[a, b]$ the function $f$ is also strictly monotone, for instance $f'(x)>0\;\forall x\in [a,b]$, then the root of the function is unique.

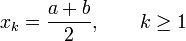

Once the existence of the solution has been established, the algorithm defines a sequence $x_k$ as the sequence of the midpoints of intervals of decreasing width which satisfy the hypothesis of the roots theorem.

Roots Theorem

The theorem of existence of roots for continuous functions (or Bolzano's theorem) states:

Let $f:[a,b] \to \mathbb{R}$ be a continuous function such that $f(a)\,f(b)<0$.

Then there exists at least one point $x$ in the open interval $(a,b)$ such that $f(x)=0$.

The proof can be found here .

Bisection algorithm

Given $f \in C^0([a,b])$ such that the hypotheses of the roots theorem are satisfied, and given a tolerance $\epsilon$:

1. $x_k = \frac{a+b}{2}$;
2. if $\frac{b-a}{2} < \epsilon$, exit;
3. if $f(x_k) = 0$, break;
   - else if $f(x_k)\,f(a) < 0$, set $b = x_k$;
   - else set $a = x_k$;
4. go to step 1.

In the first step we define the new value of the sequence: the new midpoint. In the second step we check the tolerance: if the error is less than the given tolerance, we accept $x_k$ as a root of $f$. The third step consists in evaluating the function at $x_k$: if $f(x_k)=0$ we have found the solution; otherwise, since we divided the interval in two, we need to find out on which side the root lies. To this end we use the hypothesis of the roots theorem, that is, we seek the new interval such that the function has opposite signs at the endpoints, and we redefine the interval by moving $a$ or $b$ to $x_k$. Finally, if we have not yet found a good approximation of the solution, we go back to the first step.
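The steps described above can be sketched in Python as follows. This is a minimal illustration rather than a reference implementation: the name `bisect`, the default tolerance and the `max_iter` safeguard are assumptions introduced here, and the stopping test uses the half-width of the current interval as the error estimate.

```python
import math
from typing import Callable


def bisect(f: Callable[[float], float], a: float, b: float,
           tol: float = 1e-10, max_iter: int = 200) -> float:
    """Approximate a root of f in [a, b] by bisection (illustrative sketch)."""
    fa, fb = f(a), f(b)
    # Hypothesis of the roots theorem: opposite signs at the endpoints.
    if fa * fb >= 0:
        raise ValueError("f(a) and f(b) must have opposite signs")
    for _ in range(max_iter):        # max_iter is a safeguard, not part of the method
        x = a + (b - a) / 2          # step 1: new midpoint
        if (b - a) / 2 < tol:        # step 2: tolerance check
            return x
        fx = f(x)                    # step 3: evaluate f at the midpoint
        if fx == 0:
            return x
        if fx * fa < 0:              # sign change in [a, x]: move b
            b = x
        else:                        # sign change in [x, b]: move a
            a, fa = x, fx
    return a + (b - a) / 2


# Example: approximate sqrt(2) as the root of x^2 - 2 in [1, 2].
root = bisect(lambda x: x * x - 2.0, 1.0, 2.0)
print(root, math.sqrt(2.0))
```

The midpoint is written as `a + (b - a) / 2`, which equals $(a+b)/2$ in exact arithmetic and avoids overflow for very large endpoints. The function value at `a` is cached so that each iteration evaluates `f` only once.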

Convergence of the bisection method

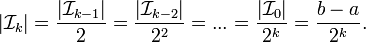

At each iteration the interval $\mathcal{I}_k=[a_k, b_k]$ is divided into halves, where $a_k$ and $b_k$ denote the endpoints of the interval at iteration $k$. Obviously $\mathcal{I}_0=[a,b]$.

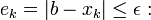

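We denote by $|\mathcal{I}_k| = b_k - a_k$ the length of the interval $\mathcal{I}_k$. In particular we have

$$|\mathcal{I}_k| = \frac{|\mathcal{I}_{k-1}|}{2} = \frac{|\mathcal{I}_0|}{2^k} = \frac{b-a}{2^k}.$$

Note that $\alpha \in \mathcal{I}_k$ for all $k \ge 0$. Since $x_k$ is the midpoint of $\mathcal{I}_k$, the error $e_k = x_k - \alpha$ satisfies

$$|e_k| \le \frac{|\mathcal{I}_k|}{2}.$$

From this we have that $|e_k| \le \frac{b-a}{2^{k+1}}$, since $|\mathcal{I}_k| = \frac{b-a}{2^k}$. For this reason we obtain

$$\lim_{k\to\infty} |e_k| = 0,$$

which proves the global convergence of the method.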

The convergence of the bisection method is very slow. Although the error, in general, does not decrease monotonically, the average rate of convergence is 1/2 and so, slightly changing the definition of order of convergence, it is possible to say that the method converges linearly with rate 1/2.

Do not be confused by the fact that, in some books or other references, the error bound is sometimes written as $|e_k| \le \frac{b-a}{2^k}$. This is because the sequence is defined there for $k \ge 1$ instead of $k \ge 0$.
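The bound $|e_k| \le \frac{b-a}{2^{k+1}}$ and the non-monotone behaviour of the error can be checked numerically. The sketch below is only an illustration: the test function $x^2 - 2$ on $[1, 2]$ and the helper name `bisection_midpoints` are assumptions chosen here, not taken from the text above.

```python
import math


def bisection_midpoints(f, a, b, n):
    """Return up to n midpoints x_0, x_1, ... generated by bisection on [a, b]."""
    fa = f(a)
    xs = []
    for _ in range(n):
        x = a + (b - a) / 2
        xs.append(x)
        fx = f(x)
        if fx == 0:          # exact root found, nothing left to bisect
            break
        if fx * fa < 0:      # root lies in [a, x]
            b = x
        else:                # root lies in [x, b]
            a, fa = x, fx
    return xs


a, b = 1.0, 2.0
alpha = math.sqrt(2.0)       # known root, used only to measure the error
xs = bisection_midpoints(lambda x: x * x - 2.0, a, b, 20)

for k, x in enumerate(xs):
    err = abs(x - alpha)
    bound = (b - a) / 2 ** (k + 1)
    print(f"k={k:2d}  |e_k|={err:.3e}  bound={bound:.3e}")
```

In this run the error grows from $k=0$ to $k=1$ (the midpoints are 1.5 and 1.25) while still staying below the bound, which illustrates why the rate 1/2 holds for the bound rather than for each individual step.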

Example

Consider the function $f(x) = \cos x$ in $[0, 3\pi]$. In this interval the function has 3 roots: $\alpha_1 = \frac{\pi}{2}$, $\alpha_2 = \frac{3\pi}{2}$ and $\alpha_3 = \frac{5\pi}{2}$.

Theoretically the bisection method converges in only one iteration to $\alpha_2$. In practice, however, the method converges to $\alpha_1$ or to $\alpha_3$. In fact, because of the finite representation of real numbers on the computer, $x_0 \neq \frac{3\pi}{2}$ and, depending on the rounding, $f(x_0)$ could be positive or negative, but never zero. In this way the bisection algorithm, in this case, automatically excludes the root $\alpha_2$ at the first iteration, since the error is still large (the bound $\frac{b-a}{2} = \frac{3\pi}{2}$ is far above any practical tolerance).
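The floating-point effect described above can be observed directly. The following sketch assumes IEEE double precision and the standard `math.cos`; the helper `bisect` and the tolerance `1e-10` are illustrative choices, and which of the two outer roots is reached may depend on the platform's rounding.

```python
import math


def bisect(f, a, b, tol=1e-10):
    """Plain bisection, assuming f(a) and f(b) have opposite signs."""
    fa = f(a)
    while True:
        x = a + (b - a) / 2
        if (b - a) / 2 < tol:    # tolerance reached
            return x
        fx = f(x)
        if fx == 0:              # exact root (not expected here)
            return x
        if fx * fa < 0:
            b = x
        else:
            a, fa = x, fx


a, b = 0.0, 3.0 * math.pi
x0 = a + (b - a) / 2             # closest double to 3*pi/2, not 3*pi/2 itself
f0 = math.cos(x0)                # tiny in magnitude, but not exactly zero
root = bisect(math.cos, a, b)

print(f"f(x_0) = {f0!r}")
print(f"root / pi = {root / math.pi:.6f}")
```

Because `f0` is nonzero, its sign alone decides whether the search continues in the left or in the right half of the interval, so the computed root is close to $\frac{\pi}{2}$ or $\frac{5\pi}{2}$ and never to $\frac{3\pi}{2}$.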

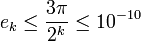

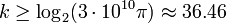

Suppose that the algorithm converges to $\alpha_1$ and let's see how many iterations are required to satisfy the relation $|e_k| \le \epsilon$ for a given tolerance $\epsilon$. In practice, we need to impose

$$|e_k| \le \frac{b-a}{2^{k+1}} = \frac{3\pi}{2^{k+1}} \le \epsilon,$$

and so, solving this inequality, we have

$$k \ge \log_2\!\left(\frac{3\pi}{\epsilon}\right) - 1,$$

and, since $k$ is a natural number, we find $k \ge \left\lceil \log_2\!\left(\frac{3\pi}{\epsilon}\right) - 1 \right\rceil$.
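The iteration count from the inequality above can be evaluated with a few lines of Python. This is a sketch under stated assumptions: the function name `min_iterations` is hypothetical, and the tolerance `1e-10` is an illustrative value chosen here, not one taken from the text.

```python
import math


def min_iterations(a: float, b: float, tol: float) -> int:
    """Smallest k >= 0 such that (b - a) / 2**(k + 1) <= tol."""
    if tol <= 0 or b <= a:
        raise ValueError("need tol > 0 and a < b")
    # Solve (b - a) / 2**(k + 1) <= tol for k and round up to an integer.
    return max(0, math.ceil(math.log2((b - a) / tol) - 1))


# Interval [0, 3*pi] from the example; 1e-10 is an assumed tolerance.
k = min_iterations(0.0, 3.0 * math.pi, 1e-10)
print(k)  # 36 for this illustrative tolerance
```

When $(b-a)/\epsilon$ is extremely close to a power of two, rounding in `math.log2` could shift the result by one, so checking the bound $\frac{b-a}{2^{k+1}} \le \epsilon$ directly is a reasonable safeguard.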

