Introduction to differentiation

Resources

Wikibooks entry for Differentiation

Prelude

Arithmetic is about what you can do with numbers. Algebra is about what you can do with variables. Calculus is about what you can do with functions. Just as in arithmetic there are things you can do to a number to give another number, such as square it or add it to another number, in calculus there are two basic operations that given a function yield new and intimately related functions. The first of these operations is called differentiation, and the new function is called the derivative of the original function.

This set of notes deals with the fundamentals of differentiation. For information about the second functional operator of calculus, visit Integration by Substitution after completing this unit.

Before we dive in, we will warm up with an excursion into the mathematical workings of interest in banking.

Compound Interest

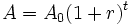

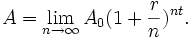

Let us suppose that we deposit an amount  in the bank on New Year's Day, and furthermore that every year on the year the amount is augmented by a rate

in the bank on New Year's Day, and furthermore that every year on the year the amount is augmented by a rate  times the present amount. Then the amount

times the present amount. Then the amount  in the bank on any given New Year's Day,

in the bank on any given New Year's Day,  years after the first is given by the expression

years after the first is given by the expression

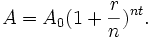

.

.

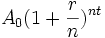

Unfortunately, if we withdraw the money three days before the New Year, we don't get any of the interest payment for that year. A fairer system would involve calculating interest $n$ times a year at the rate $\frac{r}{n}$. In fact this gives us a slightly different value even if we take our money out on a New Year's Day, because every time we calculate interest, we receive interest on our previous interest. The amount $A$ we receive with this improved system is given by the expression

$$A = A_0\left(1 + \frac{r}{n}\right)^{nt}.$$

With this flexible system, we could set $n$ to $12$ to compound every month, or to $365$ to compound every day, or to about $31{,}536{,}000$ to compound every second. But why stop there? Why not compound the interest every moment? What is really meant by that is this: as we increase $n$, does the value for $A$ get ever greater with $n$, or does it approach some reasonable quantity? If the latter is the case, then it is meaningful to ask, "What does $A$ approach?" As we can see from the following table with sample values, this is in fact the case.

| $n$ | $A$ |
|---|---|
| 1 | 1.02500 |
| 12 | 1.02529 |
| 365 | 1.02531 |
| 31536000 | 1.02532 |
| 100000000 | 1.02532 |

The sample values correspond to $A_0 = 1$, $r = 0.025$, $t = 1$.

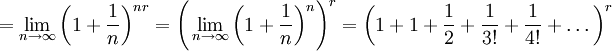
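The sample values in the table can be reproduced numerically. The following is a minimal sketch; the function name `compound` and the inputs $A_0 = 1$, $r = 0.025$, $t = 1$ are assumptions chosen for illustration (they are consistent with the tabulated values).

```python
def compound(a0: float, r: float, t: float, n: int) -> float:
    """Amount after t years at yearly rate r, compounded n times a year."""
    return a0 * (1 + r / n) ** (n * t)


# Assumed sample values: A_0 = 1, r = 0.025, t = 1.
for n in (1, 12, 365, 31_536_000, 100_000_000):
    print(f"n = {n:>11,d}   A = {compound(1.0, 0.025, 1.0, n):.5f}")
```

The printed amounts grow with $n$ but by ever smaller steps, which is the behaviour the table is meant to show.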

As we can see, as $n$ goes off toward infinity, $A$ approaches a finite value. Taking this to heart, we may come to our final system in which we define $A$ as follows:

$$A = \lim_{n\to\infty} A_0\left(1+\frac{r}{n}\right)^{nt}.$$

Thus we set $A$ now not to $A_0\left(1+\frac{r}{n}\right)^{nt}$ evaluated for some large $n$, but rather to the limit of that value as $n$ approaches infinity. This is the formula for continually compounded interest. To clean up this formula, note that neither $A_0$ nor $t$ "interfere" in any way with the evaluation of the limit, and may consequently be moved outside of the limit without affecting the value of the expression:

$$A = A_0\left[\lim_{n\to\infty}\left(1+\frac{r}{n}\right)^{n}\right]^{t} = A_0\,[f(r)]^{t},$$

where

$$f(r) = \lim_{n\to\infty}\left(1+\frac{r}{n}\right)^{n}.$$

We can see from the form of the expression that $A$ increases exponentially with $t$, much as it did in our very first equation. The difference is that the original base $1 + r$ has been replaced with the base $\lim_{n\to\infty}\left(1+\frac{r}{n}\right)^{n}$, which we have yet to simplify.

Take a moment to step back and do the following exercises:

- Without looking back, see if you can write down the expressions that represent
  - yearly interest
  - semiannual interest
  - monthly interest
  - interest $n$ times a year
  - continually compounded interest
- Think about how much money you have. Figure out how long you would have to leave your money in a bank that compounds interest monthly before you became a millionaire, with a yearly interest rate of
  - .02 (common for a savings account)
  - .07 (average gain in the US stock market over a reasonably long period).

Finding the Base

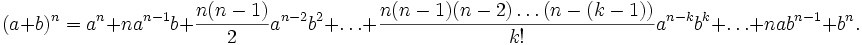

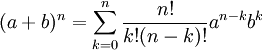

In order to shed some light on the expression $\lim_{n\to\infty}\left(1+\frac{r}{n}\right)^{n}$, whose value we call $f(r)$, we shall make use of the following expansion, known as the Binomial Theorem:

$$(a+b)^n = a^n + n\,a^{n-1}b + \frac{n(n-1)}{2!}\,a^{n-2}b^2 + \frac{n(n-1)(n-2)}{3!}\,a^{n-3}b^3 + \cdots + b^n.$$

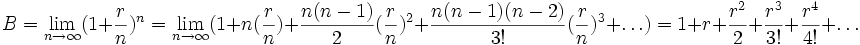

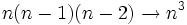

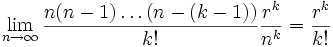

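By applying it to our limit, we get

$$\lim_{n\to\infty}\left(1+\frac{r}{n}\right)^{n} = \lim_{n\to\infty}\left[1 + n\,\frac{r}{n} + \frac{n(n-1)}{2!}\,\frac{r^2}{n^2} + \frac{n(n-1)(n-2)}{3!}\,\frac{r^3}{n^3} + \cdots\right] = 1 + r + \frac{r^2}{2!} + \frac{r^3}{3!} + \cdots.$$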

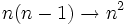

This last step may seem mystifying at first. What happened to the limit? And where did all of the $n$'s go? In fact it was the evaluation of the limit that allowed us to remove the $n$'s. More exactly, as $n \to \infty$, so too $\frac{n(n-1)}{n^2} \to 1$, $\frac{n(n-1)(n-2)}{n^3} \to 1$, etc., so that the top left and bottom right of each term cancel to produce the last expression.
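The cancellation described above can be checked numerically. The following is a small sketch; the helper names `falling_ratio` and `series` and the rate $r = 0.025$ are assumptions made for illustration.

```python
from math import factorial


def falling_ratio(n: int, k: int) -> float:
    """Return n(n-1)...(n-k+1) / n^k, the n-dependent factor of the k-th term."""
    ratio = 1.0
    for j in range(k):
        ratio *= (n - j) / n
    return ratio


def series(r: float, terms: int = 20) -> float:
    """Partial sum of 1 + r + r^2/2! + r^3/3! + ..."""
    return sum(r**k / factorial(k) for k in range(terms))


# As n grows, the ratios approach 1 and (1 + r/n)^n approaches the series.
for n in (10, 1_000, 100_000):
    print(n, falling_ratio(n, 2), falling_ratio(n, 3), (1 + 0.025 / n) ** n)
print("series:", series(0.025))
```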

Take a moment to look over the following exercises. Take the time to follow the trains of thought that are newest to you.


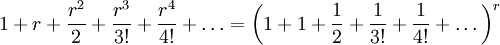

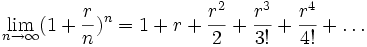

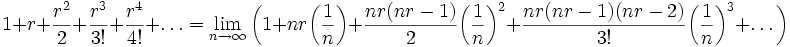

The Birth of e

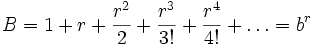

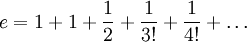

Now comes a real surprise. As it turns out, the infinite polynomial above, $f(r) = 1 + r + \frac{r^2}{2!} + \frac{r^3}{3!} + \cdots$, is in fact exponential in $r$. That is, $f(r) = a^r$, for some $a$. In order to show this far-from-obvious fact, I offer the following.

$$\begin{aligned}
1 + r + \frac{r^2}{2!} + \frac{r^3}{3!} + \cdots
&= \lim_{n\to\infty}\left[1 + nr\,\frac{1}{n} + \frac{nr(nr-1)}{2!}\,\frac{1}{n^2} + \frac{nr(nr-1)(nr-2)}{3!}\,\frac{1}{n^3} + \cdots\right] \\
&= \lim_{n\to\infty}\left(1+\frac{1}{n}\right)^{nr} \\
&= \left[\lim_{n\to\infty}\left(1+\frac{1}{n}\right)^{n}\right]^{r} \\
&= \left[1 + 1 + \frac{1}{2!} + \frac{1}{3!} + \cdots\right]^{r}.
\end{aligned}$$

We define $e$ to be the value of this last infinite series of numbers:

$$e = 1 + 1 + \frac{1}{2!} + \frac{1}{3!} + \cdots.$$

$e$, an irrational (and in fact transcendental) number, has the approximate value 2.71828, which you may easily verify on a standard pocket or graphing calculator. With this definition, the infinite polynomial above is simply $e^r$.

There are a few things to think about.

- The first line in the preceding derivation was motivated by my knowledge of the outcome.
  - Convince yourself that the two expressions are in fact equal to one another.
    - Evaluate the term $\frac{nr(nr-1)}{n^2}$ for increasingly large values of $n$. How does that compare to $r^2$?
  - Now that you have convinced yourself that I may do it, ask yourself why I would do it.
- Using the reverse Binomial Theorem, do you understand how it leads to the next expression?
  - Convince yourself that the two expressions are in fact equal to one another.
- Is the equation $1 + r + \frac{r^2}{2!} + \cdots = \left[1 + 1 + \frac{1}{2!} + \cdots\right]^{r}$ something that one would predict merely from the rules of exponents or distribution?
- What makes certain seemingly uninteresting numbers, such as $e$, so profoundly central to mathematics?

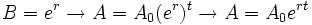
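The approximate value 2.71828 can be verified without a calculator. The sketch below sums the series $1 + 1 + \frac{1}{2!} + \frac{1}{3!} + \cdots$ and, for comparison, evaluates the standard limit $\left(1 + \frac{1}{n}\right)^n$ for a large $n$; the function names `e_series` and `e_limit` are hypothetical.

```python
import math


def e_series(terms: int) -> float:
    """Partial sum 1 + 1 + 1/2! + 1/3! + ... using the given number of terms."""
    return sum(1 / math.factorial(k) for k in range(terms))


def e_limit(n: int) -> float:
    """The expression (1 + 1/n)^n, which approaches e as n grows."""
    return (1 + 1 / n) ** n


for terms in (2, 5, 10, 15):
    print(terms, e_series(terms))
print(e_limit(1_000_000), math.e)
```

The series converges very quickly (a handful of terms already gives several correct digits), while the limit form converges slowly.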

Back to the Start

From here, everything cascades back to our original goal, namely to find a usable formula for continually compounded interest.

$$A = A_0\left[\lim_{n\to\infty}\left(1+\frac{r}{n}\right)^{n}\right]^{t} = A_0\left(e^{r}\right)^{t} = A_0 e^{rt}.$$

And there she is.
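To see the formula at work, the sketch below compares compounding $n$ times a year with continual compounding, $A_0 e^{rt}$. The deposit, rate and duration are hypothetical values chosen only for illustration, as are the function names.

```python
import math


def compound(a0: float, r: float, t: float, n: int) -> float:
    """Amount after t years at yearly rate r, compounded n times a year."""
    return a0 * (1 + r / n) ** (n * t)


def continuous(a0: float, r: float, t: float) -> float:
    """Amount after t years with continually compounded interest: A_0 e^(rt)."""
    return a0 * math.exp(r * t)


a0, r, t = 1000.0, 0.025, 10.0  # hypothetical deposit, yearly rate, years
for n in (1, 12, 365):
    print(f"n = {n:>3}: {compound(a0, r, t, n):.4f}")
print(f"continual: {continuous(a0, r, t):.4f}")
```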

Take a moment to do the following exercises.

- Think about how much money you have. How long will it take to become a millionaire if you leave the money in a bank with yearly interest of .025
  - that compounds interest yearly?
  - that compounds interest continually?
- Seeing as the values with and without continually compounded interest are very close to one another, what does that tell you about the two equations used?
  - Both formulas are of the form $A = A_0(\_\_\_\_\_)^t$. Compare the various values that we have put in this blank, especially those in the equations for yearly and continually compounded interest.
  - How close in value is $1 + r$ to $e^r$? Does that surprise you?
  - Now look at the infinite series version of the function $e^r$. Does it still surprise you that $1 + r$ and $e^r$ are so close in value?

Commentary

The formula itself, however, is quite forgettable. In fact, as you may have guessed, the importance of compounded interest pales in comparison to the importance of the ideas we stumbled upon on the way, namely limits and $e$. It is these two things that beg for us to go further into the heart of the life and being of functions. That wish is called calculus. And it all starts rather innocently with the derivative…

Notion of secant, slope,

The slope of a curve is most usefully approached by considering the simplest curve, the straight line. So, imagine a line plotted on square graph paper, of the kind familiar to just about every schoolchild. What can you say about such a line? We suppose for our discussion here that the line goes off your sheet of paper on both sides, and keeps going forever. Take your page and look at it. A line might be flat, that is parallel to the bottom of the page. It might be vertical, parallel to the sides of the page, or it might lie between these two extremes, not as flat as the first, and not as steep as the second.

The first part of the idea of 'slope' is that of steepness. How steep is the line, taking the horizontal line and a vertical line as our two extremes?

Our flat horizontal line has a slope of zero: nothing happens to the y's whatever you do to the x's. Think of cycling in parts of the Netherlands, for example.

A line at 45 degrees to the horizontal (that is, exactly halfway between vertical (90 degrees) and horizontal (0 degrees)) has a slope of 1 (this would be a brutal to impossible hill for a bicycle, and very tough on foot). As it goes across one unit, it also goes up one unit. In general, the slope is the change in y divided by the corresponding change in x.

Our vertical line is more interesting, if harder to cycle on. The slope is not defined, and as our line gets closer and closer to vertical, the slope gets bigger and bigger without limit.

The second part of the idea of slope captures something slightly different. It captures the idea of direction. Look at your line again. As it goes to your right, does it go up the page, or down the page? If it were a road going over a hill, would it be hard to follow on a bicycle (going up), or very easy (going down)? This is expressed in the slope of a line by saying that a line has a positive slope if, as it goes across, it also goes up (or, as the x's increase, the y's increase too). A line is said to have a negative slope if it goes down as it goes across (or, as the x's increase, the y's decrease). As a cyclist you want a negative slope, unless you're in training.

The Derivative

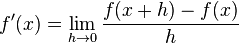

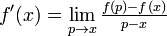

Definition

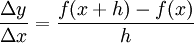

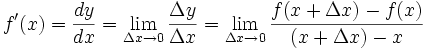

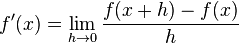

Given a function $f(x)$, we define the derivative $f'(x)$ to be

$$f'(x) = \lim_{h\to 0}\frac{f(x+h)-f(x)}{h}.$$

This definition is motivated by the proportion $\frac{f(x+h)-f(x)}{h}$, which for any $h$ defines the slope of a line, when $f$ is linear. Because of the nature of the calculation, the derivative can be figuratively thought of as the ratio between an infinitesimal $dy$ and an infinitesimal $dx$ and is often written $\frac{dy}{dx}$. Both functional notation $f'(x)$ and infinitesimal or Leibniz notation $\frac{dy}{dx}$ have their virtues. In operator theory, the derivative of a function $f$ is sometimes written as $Df$.
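The limit in the definition can be explored numerically by evaluating the quotient $\frac{f(x+h)-f(x)}{h}$ for smaller and smaller $h$. The following is a minimal sketch; the helper `difference_quotient` and the sample function $x^3$ at $x = 2$ are assumptions chosen for illustration.

```python
def difference_quotient(f, x: float, h: float) -> float:
    """Slope of the secant line through (x, f(x)) and (x + h, f(x + h))."""
    return (f(x + h) - f(x)) / h


def cube(x: float) -> float:
    return x**3


# The quotients settle toward a single value as h shrinks.
for h in (0.1, 0.01, 0.001, 1e-6):
    print(h, difference_quotient(cube, 2.0, h))
```

A computer cannot take the limit itself; it can only suggest the value that the quotients approach.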

- Using the definition above, what is $\frac{d}{dx}x^2$?
  - Note that this is a short way of asking, if $f(x) = x^2$, what is $f'(x)$? One may also ask, what is $[x^2]'$?
- If you have trouble remembering the definition of the derivative, it's much more important to know what it means, that is, why it's defined how it is. Remember it like this:

  $$\text{slope} = \frac{\Delta y}{\Delta x} = \frac{f(x+h)-f(x)}{(x+h)-x}.$$

  - From this we get the definition as stated above,

    $$f'(x) = \lim_{h\to 0}\frac{f(x+h)-f(x)}{h}.$$

- What kinds of functions have derivatives? What would a function need to have, for it not to have a derivative at some point?

Properties

The derivative satisfies a number of fundamental properties.

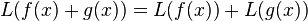

Linearity

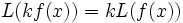

An operator  is called linear if

is called linear if  and

and  for any constant

for any constant  . To show that differentiation is a linear operator, we must show that

. To show that differentiation is a linear operator, we must show that

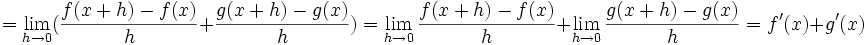

![[f(x)+g(x)]'=f'(x)+g'(x)](../I/m/42d4692b5412eda2775446cd6ee1cf4b.png) and

and ![[kf(x)]'=kf'(x)](../I/m/346ae8b24ae37ca8f84089d4e085c5a5.png) for any constant

for any constant  .

.

.

.

In other words, the differential operator (e.g.,  ) distributes over addition.

) distributes over addition.

![[kf(x)]'=\lim_{h\rightarrow 0}\frac{kf(x+h)-kf(x)}{h}=k\lim_{h\rightarrow 0}\frac{f(x+h)-f(x)}{h}=kf'(x)](../I/m/0fcbd5cfc60526c2495a6425d10fdfd1.png) .

.

In other words, addition before and after doing differentiation are equivalent.

Fundamental Rules of Differentiation

Along with linearity, which is so simple that one hardly thinks of it as a rule, the following are essential to finding the derivative of arbitrary functions.

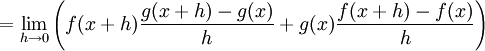

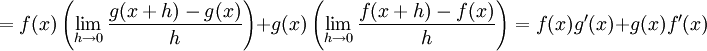

The Product Rule

It may be shown that for functions $f$ and $g$, $[f(x)g(x)]' = f(x)g'(x) + f'(x)g(x)$. Like the other two rules, this one is not a new axiom: it is directly provable from the definition of the derivative.

$$\begin{aligned}
[f(x)g(x)]' &= \lim_{h\to 0}\frac{f(x+h)g(x+h) - f(x)g(x)}{h} \\
&= \lim_{h\to 0}\frac{f(x+h)g(x+h) - f(x+h)g(x) + f(x+h)g(x) - f(x)g(x)}{h} \\
&= \lim_{h\to 0} f(x+h)\,\frac{g(x+h)-g(x)}{h} + \lim_{h\to 0} g(x)\,\frac{f(x+h)-f(x)}{h} \\
&= f(x)g'(x) + f'(x)g(x).
\end{aligned}$$

The last step uses the fact that $f(x+h) \to f(x)$ as $h \to 0$, which holds because a function that has a derivative at $x$ is continuous there.

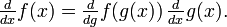
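The product rule can be sanity-checked numerically. The sketch below uses a central-difference estimate as a stand-in for the limit; the helper `derivative` and the sample functions `f` and `g` are assumptions chosen for illustration.

```python
def derivative(func, x: float, h: float = 1e-6) -> float:
    """Central-difference estimate of func'(x); a numerical stand-in for the limit."""
    return (func(x + h) - func(x - h)) / (2 * h)


def f(x: float) -> float:
    return x**2 + 1


def g(x: float) -> float:
    return 3 * x - 2


def product(x: float) -> float:
    return f(x) * g(x)


x = 1.5
lhs = derivative(product, x)                               # [f g]'
rhs = f(x) * derivative(g, x) + derivative(f, x) * g(x)    # f g' + f' g
print(lhs, rhs)
```

Both numbers agree up to rounding error, as the rule predicts.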

Chain Rule

If a function can be written as a composite function $f(g(x))$, one can obtain its derivative using the chain rule. The chain rule states that the derivative of $f(g(x))$ with respect to $x$ equals the derivative of $f$ with respect to $g$, multiplied by the derivative of $g$ with respect to $x$. In mathematical terms:

$$[f(g(x))]' = f'(g(x))\,g'(x).$$

This is commonly written as $\frac{df}{dx} = \frac{df}{dg}\,\frac{dg}{dx}$, or more explicitly

$$\frac{d}{dx}f(g(x)) = \frac{df(g)}{dg}\,\frac{dg(x)}{dx}.$$

The proof makes use of an alternate but patently equivalent definition of the derivative:

$$f'(x) = \lim_{p\to x}\frac{f(p)-f(x)}{p-x}.$$

The first step is to write the derivative of the compound function in this form; one then manipulates it and obtains the chain rule.

$$\begin{aligned}
\left[f(g(x))\right]' &= \lim_{p\to x}\frac{f(g(p))-f(g(x))}{p-x} \\
&= \lim_{p\to x}\frac{f(g(p))-f(g(x))}{g(p)-g(x)}\;\frac{g(p)-g(x)}{p-x} \\
&= \lim_{g(p)\to g(x)}\frac{f(g(p))-f(g(x))}{g(p)-g(x)}\;\lim_{p\to x}\frac{g(p)-g(x)}{p-x} \\
&= f'(g(x))\,g'(x).
\end{aligned}$$

In the third step, the first limit changes from $p \to x$ to $g(p) \to g(x)$. This is valid because if $g$ is continuous at $x$, which it must be to have a derivative at $x$, then as $p$ approaches $x$ the value of $g(p)$ approaches that of $g(x)$. (The manipulation assumes that $g(p) \neq g(x)$ for $p$ close to but different from $x$; the general case needs a slightly more careful argument.)

Differentiating a nested function occurs very frequently, which makes this rule very useful.
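As a quick numerical illustration of the chain rule, the sketch below differentiates a nested function directly and compares the result with $f'(g(x))\,g'(x)$. The helper `derivative` and the sample functions `outer` and `inner` are hypothetical choices.

```python
def derivative(func, x: float, h: float = 1e-6) -> float:
    """Central-difference estimate of func'(x); a numerical stand-in for the limit."""
    return (func(x + h) - func(x - h)) / (2 * h)


def outer(u: float) -> float:   # plays the role of f
    return u**3


def inner(x: float) -> float:   # plays the role of g
    return x**2 + 1


def composite(x: float) -> float:
    return outer(inner(x))


x = 0.5
direct = derivative(composite, x)
chain = derivative(outer, inner(x)) * derivative(inner, x)  # f'(g(x)) * g'(x)
print(direct, chain)
```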

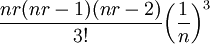

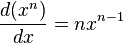

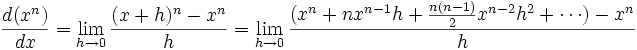

The Power Rule

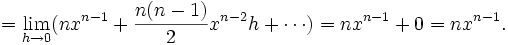

We may now readily show the relation $\frac{d}{dx}x^n = nx^{n-1}$ as follows, using the definition of the derivative and the Binomial Theorem:

$$\frac{d}{dx}x^n = \lim_{h\to 0}\frac{(x+h)^n - x^n}{h} = \lim_{h\to 0}\left[nx^{n-1} + \frac{n(n-1)}{2!}\,x^{n-2}h + \cdots + h^{n-1}\right] = nx^{n-1}.$$

While this derivation assumes that $n$ is a positive integer, it turns out that the same rule holds for all real $n$. For example,

$$\frac{d}{dx}\left[\frac{1}{x}\right] = \frac{d(x^{-1})}{dx} = (-1)x^{-2} = -\frac{1}{x^2}.$$
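The claim that the rule holds for non-integer and negative exponents can be spot-checked numerically. This is a minimal sketch; the helpers `derivative` and `power_rule` and the test point $x = 2$ are assumptions for illustration.

```python
def derivative(func, x: float, h: float = 1e-6) -> float:
    """Central-difference estimate of func'(x); a numerical stand-in for the limit."""
    return (func(x + h) - func(x - h)) / (2 * h)


def power_rule(n: float, x: float) -> float:
    """Value of n * x^(n-1), the derivative predicted by the power rule."""
    return n * x ** (n - 1)


x = 2.0
for n in (3, -1, 0.5):
    numeric = derivative(lambda u: u**n, x)
    print(n, numeric, power_rule(n, x))
```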

Take a moment to do the following exercises.

- Using the power rule and linearity, find the derivatives of the following:

- What functions have the following derivatives?

Exponentials and logarithms

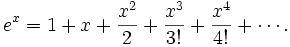

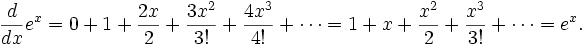

Exponentials and logarithms involve a special number denoted e.

Differentiating $e^x$

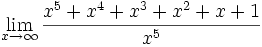

Now, recall that

$$e^x = 1 + x + \frac{x^2}{2!} + \frac{x^3}{3!} + \cdots.$$

Using the three basic rules established above we can differentiate any polynomial, even one of infinite degree:

$$\frac{d}{dx}e^x = 0 + 1 + \frac{2x}{2!} + \frac{3x^2}{3!} + \cdots = 1 + x + \frac{x^2}{2!} + \cdots = e^x.$$

$e^x$ is the remarkable function that is its own derivative. In other words, $e^x$ is an eigenfunction of the differential operator, which means that the application of the differential operator on $e^x$ has the same effect as multiplication by a real number (here, the number 1). These concepts are useful, for example, in quantum mechanics.

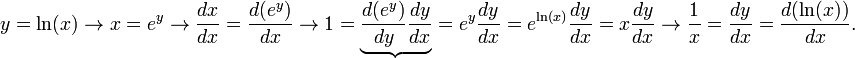

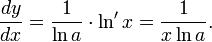
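The term-by-term differentiation of the series for $e^x$ can be mimicked on a truncated list of coefficients. The sketch below is illustrative only; the function names are hypothetical, and a finite list can only hint at what happens for the infinite series.

```python
from math import factorial


def exp_coefficients(terms: int) -> list[float]:
    """Coefficients c_k = 1/k! of the truncated series for e^x."""
    return [1 / factorial(k) for k in range(terms)]


def differentiate(coeffs: list[float]) -> list[float]:
    """Power rule applied term by term: d/dx sum c_k x^k = sum k c_k x^(k-1)."""
    return [k * c for k, c in enumerate(coeffs)][1:]


coeffs = exp_coefficients(8)
print(differentiate(coeffs))  # coefficients of the derivative
print(coeffs[:-1])            # the original coefficients, one term shorter
```

The two printed lists agree up to rounding: differentiating shifts every coefficient down one place and reproduces the same series.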

Differentiating $\ln x$

The natural logarithm is the function such that if $y = e^x$ then $\ln y = x$; in other words, it is the inverse function of $e^x$. We will make use of the chain rule (marked by the brace) in order to find its derivative:

$$\begin{aligned}
x &= e^{\ln x} \\
\frac{d}{dx}x &= \frac{d}{dx}e^{\ln x} \\
1 &= \underbrace{e^{\ln x}\,\frac{d}{dx}\ln x}_{\text{chain rule}} = x\,\frac{d}{dx}\ln x \\
\frac{d}{dx}\ln x &= \frac{1}{x}.
\end{aligned}$$

This conclusion, that the derivative of $\ln x$ is $\frac{1}{x}$, is remarkable: it ties together two seemingly unrelated functions. Be careful, this derivative has definite values only when $x > 0$! (Examine the domain of $\ln x$ to understand why.)

Differentiating functions which are not immediately related to base e

Exponentials

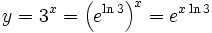

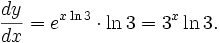

Supppose we have the function

To differentiate this, we rewrite this as

Since  is a constant,

is a constant,

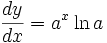

In other words, for a constant a, we have

whenever

whenever

This re-enforces the special place that  has in calculus - it is the unique number for which the constant

has in calculus - it is the unique number for which the constant  is precisely equal to one.

is precisely equal to one.
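The role of the constant $\ln a$ can be seen numerically: for each base, the estimated slope of $a^x$ divided by $a^x$ itself should equal $\ln a$. The following is a sketch under that reading; the helper `derivative` and the sample bases are assumptions for illustration.

```python
import math


def derivative(func, x: float, h: float = 1e-6) -> float:
    """Central-difference estimate of func'(x); a numerical stand-in for the limit."""
    return (func(x + h) - func(x - h)) / (2 * h)


x = 1.5
for a in (2.0, math.e, 10.0):
    numeric = derivative(lambda u: a**u, x)
    # The ratio numeric / a^x estimates the constant ln(a).
    print(a, numeric / a**x, math.log(a))
```

For the base $e$ the ratio is 1, which is the special property noted above.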

Logarithms

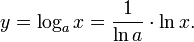

Let us differentiate the function

$$y = \log_a x.$$

We already know how to differentiate $\ln x$, so let's change it into another form with the base $e$:

$$y = \frac{\ln x}{\ln a}.$$

Because $\frac{1}{\ln a}$ is a constant,

$$\frac{dy}{dx} = \frac{1}{\ln a}\cdot\frac{1}{x} = \frac{1}{x\ln a}.$$

In conclusion, for any constant $a$ with $a > 0$ and $a \neq 1$, the derivative of $\log_a x$ is $\frac{1}{x\ln a}$.

Implicit Differentiation

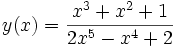

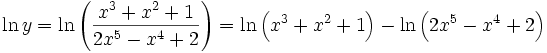

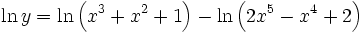

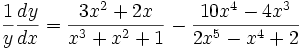

Let's suppose that

$$y = \frac{u(x)}{v(x)},$$

where $u$ and $v$ are differentiable functions that take positive values. One could find $\frac{dy}{dx}$ with the quotient rule, but for more complicated functions, it may be better to use what is called "implicit differentiation".

In this case, we take the logarithm of both sides, to obtain

$$\ln y = \ln\frac{u}{v},$$

or, in other words, just simply

$$\ln y = \ln u - \ln v.$$

Differentiating the left and right hand side, we get

$$\frac{1}{y}\frac{dy}{dx} = \frac{1}{u}\frac{du}{dx} - \frac{1}{v}\frac{dv}{dx}.$$

Now, multiply both sides by $y$, which we know is just $\frac{u}{v}$, to obtain the answer:

$$\frac{dy}{dx} = \frac{u}{v}\left(\frac{1}{u}\frac{du}{dx} - \frac{1}{v}\frac{dv}{dx}\right),$$

which of course can be simplified further. You should verify that this result agrees with the quotient rule. Differentials of logarithms of functions occur frequently in places like statistical mechanics.

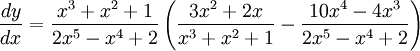

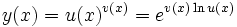

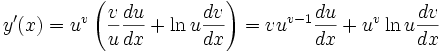

General exponentials and logarithms

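Consider the function

$$y = u^v,$$

where $u$ and $v$ are both functions of $x$ and $u > 0$. Writing $y = e^{v\ln u}$ and differentiating, it can be seen that

$$\frac{dy}{dx} = v\,u^{v-1}\,\frac{du}{dx} + u^v\ln u\,\frac{dv}{dx}.$$

Compare this result to the chain rule and power rule results. The first term results from treating $v$ as constant. The second term results from treating $u$ as constant.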

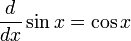

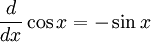

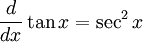

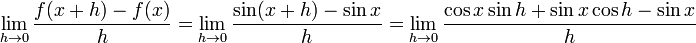

Trigonometric functions

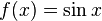

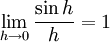

Consider the function  . To find the derivative of

. To find the derivative of  , we use the definition of the derivative, as well as some trigonometric identities and the linearity of the limit operator.

, we use the definition of the derivative, as well as some trigonometric identities and the linearity of the limit operator.

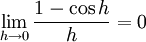

and since  and

and  , the above expression simplifies to

, the above expression simplifies to  .

.

Thus, the derivative of  is

is  .

.
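The two limits used in this derivation, $\frac{\sin h}{h} \to 1$ and $\frac{1-\cos h}{h} \to 0$, can be made plausible with a few numerical evaluations. The sketch below is illustrative only; the helper `sin_difference_quotient` and the sample point $x = 1$ are assumptions.

```python
import math

# Both quotients for shrinking h: the first tends to 1, the second to 0.
for h in (0.1, 0.01, 0.001, 0.0001):
    print(h, math.sin(h) / h, (1 - math.cos(h)) / h)


def sin_difference_quotient(x: float, h: float) -> float:
    """The quotient (sin(x + h) - sin(x)) / h from the definition of the derivative."""
    return (math.sin(x + h) - math.sin(x)) / h


# Compare the difference quotient of sin at x = 1 with cos(1).
print(sin_difference_quotient(1.0, 1e-6), math.cos(1.0))
```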

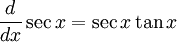

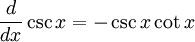

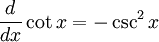

We perform the same process to find the derivatives of the other trigonometric functions (try to derive them on your own as an exercise). Since these derivatives come up quite often, it would behoove you to memorize them.

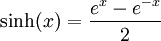

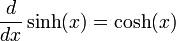

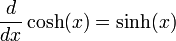

Hyperbolic functions

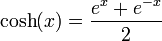

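The rules for differentiation involving hyperbolic functions behave very much like their trigonometric counterparts. Here,

$$\sinh x = \frac{e^x - e^{-x}}{2}, \qquad \cosh x = \frac{e^x + e^{-x}}{2},$$

so it can be seen that

$$\frac{d}{dx}\sinh x = \cosh x$$

and

$$\frac{d}{dx}\cosh x = \sinh x.$$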


$$[f(x)+g(x)]' = \lim_{h\to 0}\frac{(f(x+h)+g(x+h))-(f(x)+g(x))}{h}$$

$$= \lim_{h\to 0}\left[\cos x\,\frac{\sin h}{h} - \sin x\,\frac{1-\cos h}{h}\right] = \cos x\left[\lim_{h\to 0}\frac{\sin h}{h}\right] - \sin x\left[\lim_{h\to 0}\frac{1-\cos h}{h}\right],$$