Calculus/Limits

Introducing Limits

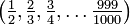

Let's consider the following expression:

$$\frac{n}{n+1}$$

As $n$ gets larger and larger, the fraction gets closer and closer to 1.

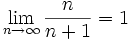

As $n$ approaches infinity, the expression evaluates to fractions whose difference from 1 becomes negligible; the expression itself approaches 1. As mathematicians would say, the limit of the expression as $n$ goes to infinity is 1, or in symbols:

$$\lim_{n\to\infty}\frac{n}{n+1} = 1.$$
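A small numerical sketch of this idea, taking the expression to be $\frac{n}{n+1}$: using exact fractions, the distance from 1 is always exactly $\frac{1}{n+1}$, which shrinks as $n$ grows. The helper name `term` is illustrative only.

```python
from fractions import Fraction

def term(n: int) -> Fraction:
    """Exact value of n/(n+1) as a fraction."""
    return Fraction(n, n + 1)

for n in (1, 10, 100, 1000, 10_000):
    gap = 1 - term(n)  # always exactly 1/(n+1), which shrinks toward 0
    print(f"n={n:>6}  n/(n+1) = {float(term(n)):.6f}  distance to 1 = {gap}")
```

Exact `Fraction` arithmetic is used so that the printed distances are not blurred by floating-point rounding.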

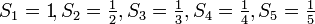

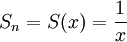

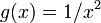

An interesting sequence is $\frac{1}{n}$.

As $n$ gets bigger (in symbols, $n \to \infty$), we get smaller values of $\frac{1}{n}$, for example $\frac{1}{10} > \frac{1}{100} > \frac{1}{1000}$, and so on. Clearly, $\frac{1}{n}$ can't be smaller than zero (for if $\frac{1}{n} < 0$, then $n$ would have to be less than zero, while here $n$ is positive).

Then we may say that $\lim_{n\to\infty}\frac{1}{n} = 0$.

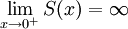

Continuing with this sequence, we might want to study what happens when $n$ gets near to zero, and later what happens with negative values of $n$ going near to zero. Usually, the letter $n$ is reserved for integer values, so we are going to redefine our sequence as a function of a real variable, $f(x) = \frac{1}{x}$.

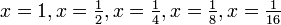

is reserved for integer values, so we are going to redefine our sequence as  . If we take a sequence of values of

. If we take a sequence of values of  , say

, say

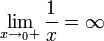

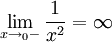

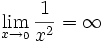

We see that the respective values of  grows indefinitely, for

grows indefinitely, for

In this case, we write $\lim_{x\to 0^+}\frac{1}{x} = +\infty$; in words, the limit of $\frac{1}{x}$ as $x$ goes to zero from the right (on a graph, the points move leftward toward zero along the positive $x$-axis) diverges (or tends to infinity, or is unbounded, but we never say that it is infinity or equals infinity).

Another case arises if we study sequences of values of $x$ in which every element is less than zero and the sequence is increasing but never exceeds zero. One example of such a sequence is:

$$x_1 = -1,\ \text{with}\ f(x_1) = -1;\quad x_2 = -0.1,\ \text{with}\ f(x_2) = -10;\quad x_3 = -0.01,\ \text{with}\ f(x_3) = -100;\ \ldots$$

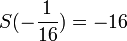

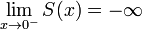

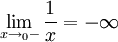

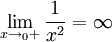

The values of $f(x)$ decrease without bound. Then we say that $\lim_{x\to 0^-}\frac{1}{x} = -\infty$, or that $\frac{1}{x}$ tends to minus infinity as $x$ goes to zero from the left.
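Both one-sided behaviours of $f(x) = \frac{1}{x}$ can be tabulated with a short sketch. It assumes the sample points $x = \pm 10^{-k}$ (our choice) and uses exact fractions so that every value is an integer power of ten.

```python
from fractions import Fraction

def f(x: Fraction) -> Fraction:
    return 1 / x

# x_k = 10**-k approaches 0 from the right; -x_k approaches 0 from the left.
right = [Fraction(1, 10**k) for k in range(6)]
left = [-x for x in right]

right_values = [f(x) for x in right]  # 1, 10, 100, ... grow without bound
left_values = [f(x) for x in left]    # -1, -10, -100, ... decrease without bound
print("from the right:", [int(v) for v in right_values])
print("from the left: ", [int(v) for v in left_values])
```

No finite list of samples proves divergence, but the pattern $f(\pm 10^{-k}) = \pm 10^{k}$ makes it clear that no bound can hold on either side.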

Basic Limits

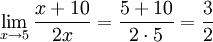

For some limits (if the function is continuous at the point being approached), the variable can be replaced with its value directly:

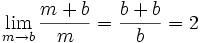

For example,

$$\lim_{x\to 2} x^2 = 2^2 = 4$$

and

$$\lim_{x\to y}\frac{x+2}{x} = \frac{y+2}{y}$$

(with $y$ not equal to 0).

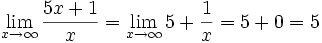

Others are somewhat more complicated:

$$\lim_{x\to 0}\frac{x^2 + 2x}{x}$$

Note that in this limit, one may not immediately set $x$ equal to $0$, because this would make the expression evaluate to

$$\frac{0}{0},$$

which is undefined. However, one may reduce the expression by splitting it into separate fractions (in this case, $\frac{x^2}{x} = x$ and $\frac{2x}{x} = 2$ for $x \neq 0$), which can be evaluated directly:

$$\lim_{x\to 0}\frac{x^2 + 2x}{x} = \lim_{x\to 0}(x + 2) = 2.$$
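As a quick numerical check, take $\frac{x^2 + 2x}{x}$ as the example expression (an assumption for illustration). Direct substitution of $x = 0$ fails, while the simplified form $x + 2$ can simply be evaluated; the original expression agrees with it near $0$ from both sides. Both function names are hypothetical.

```python
def original(x: float) -> float:
    """The example expression (x**2 + 2x) / x, undefined at x = 0."""
    return (x**2 + 2 * x) / x

def simplified(x: float) -> float:
    """x**2/x + 2x/x = x + 2, equal to original(x) for every x != 0."""
    return x + 2

try:
    original(0.0)
except ZeroDivisionError:
    print("direct substitution: 0/0 is undefined")

for h in (0.1, 0.001, 1e-6):
    print(f"x = +/-{h:g}: {original(h):.7f} {original(-h):.7f}")
print("limit via the simplified form:", simplified(0.0))
```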

Right and Left Hand Limits

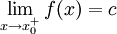

Sometimes, we want to calculate the limit of a function as a variable approaches a certain value from only one side; that is, from the left or right side. This is denoted, respectively, by $\lim_{x\to a^-} f(x)$ or $\lim_{x\to a^+} f(x)$.

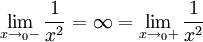

If the left-hand and right-hand limits do not both exist, or are not equal to each other, then the limit does not exist.


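The following limit does not exist:

$$\lim_{x\to 0}\frac{1}{x}$$

It doesn't, because the left-hand and right-hand limits are unequal: $\frac{1}{x}$ tends to $-\infty$ as $x \to 0^-$ and to $+\infty$ as $x \to 0^+$.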

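Note that if the function is undefined at the point where we are trying to find the limit, that doesn't mean the limit of the function at that point does not exist. For example, let's evaluate $\frac{x^2 - 1}{x - 1}$ at $x = 1$, where the function itself is undefined because the denominator is zero. For $x \neq 1$, $\frac{x^2 - 1}{x - 1} = x + 1$, so:

| Left-hand limit | Right-hand limit |
|---|---|
| $\lim_{x\to 1^-}\frac{x^2-1}{x-1} = \lim_{x\to 1^-}(x+1) = 2$ | $\lim_{x\to 1^+}\frac{x^2-1}{x-1} = \lim_{x\to 1^+}(x+1) = 2$ |

Therefore:

$$\lim_{x\to 1}\frac{x^2-1}{x-1} = 2.$$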
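A numerical sketch of the one-sided approach, assuming the example function is $\frac{x^2 - 1}{x - 1}$: evaluating it at $1 - h$ and $1 + h$ for shrinking $h$ shows both sides closing in on 2, even though the function cannot be evaluated at $x = 1$ itself. The name `g` is illustrative.

```python
def g(x: float) -> float:
    """(x**2 - 1) / (x - 1), undefined at x = 1."""
    return (x**2 - 1) / (x - 1)

for h in (0.1, 0.01, 0.001, 1e-6):
    print(f"h={h:g}  left g(1-h)={g(1 - h):.7f}  right g(1+h)={g(1 + h):.7f}")
```

Values from the left stay just below 2 and values from the right just above it, matching the two entries of the table.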

Some Formal Definitions and Properties

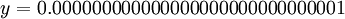

Until now, limits have been discussed informally, but it shouldn't all be intuition, for we need to be sure of certain assertions. For example, consider the limit

$$\lim_{n\to\infty}\frac{1}{n} = 0.$$

We have seen that $\frac{1}{n}$ decreases as $n$ increases, but how do we guarantee that there isn't a small positive value $c$ such that $\frac{1}{n}$ is never smaller than $c$? If there were such a $c$, we might want to say that the limit is $c$, not zero, and we can't test every single possible value of $n$ (for there are infinitely many). We must then find a mathematical way of proving that there is no such $c$, but for that we need to define formally what a limit is.
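The question about $c$ has a direct answer: for any $c > 0$, choosing an integer $N > \frac{1}{c}$ gives $\frac{1}{N} < c$ (the Archimedean property of the reals), and since $\frac{1}{n}$ decreases, every $n \ge N$ works too. A minimal sketch, with the hypothetical helper `first_index_below`:

```python
import math
from fractions import Fraction

def first_index_below(c: Fraction) -> int:
    """Smallest positive integer N with 1/N < c, for a given c > 0.

    1/N < c is equivalent to N > 1/c, so N is the first integer above 1/c.
    Because 1/n decreases, every n >= N also satisfies 1/n < c.
    """
    if c <= 0:
        raise ValueError("c must be positive")
    return math.floor(1 / c) + 1

for c in (Fraction(1, 3), Fraction(2, 7), Fraction(1, 10**6)):
    N = first_index_below(c)
    print(f"c = {c}: 1/{N} = {Fraction(1, N)} < c")
```

So no positive $c$ can act as a floor for $\frac{1}{n}$; the formal definition below turns this kind of argument into a general rule.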

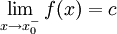

Right Limits, Left Limits, Limits and Continuity

Let $f$ be a real-valued function. We say that

$$\lim_{x\to a^-} f(x) = L$$

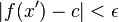

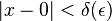

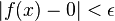

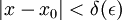

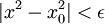

if for every $\varepsilon > 0$ there is a $\delta > 0$ such that, for every $x$ between $a - \delta$ and $a$,

$$|f(x) - L| < \varepsilon,$$

where $|y|$ denotes the absolute value of $y$.

This is the formal definition of convergence from the left. It means that for each possible error $\varepsilon$ bigger than zero, we are able to find an interval $(a - \delta, a)$ such that for all $x$ in that interval, the distance $|f(x) - L|$ between the value of the function and the constant $L$ is less than the error.


In an analogous fashion, we say that

$$\lim_{x\to a^+} f(x) = L$$

if for every $\varepsilon > 0$ there is a $\delta > 0$ such that, for every $x$ between $a$ and $a + \delta$,

$$|f(x) - L| < \varepsilon.$$

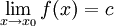

And to finish the necessary definitions,

$$\lim_{x\to a} f(x) = L$$

if

$$\lim_{x\to a^-} f(x) = L$$

and

$$\lim_{x\to a^+} f(x) = L.$$
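The definitions can be probed numerically with a sketch like the one below. The helper `holds_on_samples` is hypothetical: it tests $|f(x) - L| < \varepsilon$ at evenly spaced points strictly inside $(a - \delta, a)$ or $(a, a + \delta)$. A finite sample can expose a $\delta$ that does not work, but it can never prove a limit; that still requires an argument like the one in the example that follows.

```python
from typing import Callable

def holds_on_samples(f: Callable[[float], float], a: float, L: float,
                     eps: float, delta: float, side: str,
                     samples: int = 1000) -> bool:
    """Check |f(x) - L| < eps at sample points strictly between a and a -/+ delta.

    side is "left" for the interval (a - delta, a) and "right" for (a, a + delta).
    """
    sign = -1.0 if side == "left" else 1.0
    for i in range(1, samples + 1):
        x = a + sign * delta * i / (samples + 1)  # never reaches a or a +/- delta
        if not abs(f(x) - L) < eps:
            return False
    return True

square = lambda x: x * x
print(holds_on_samples(square, 0.0, 0.0, 0.01, 0.1, "left"),
      holds_on_samples(square, 0.0, 0.0, 0.01, 0.1, "right"))
```

Checking both `"left"` and `"right"` mirrors the last definition: the two-sided limit needs both one-sided conditions.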

Example:

$$\lim_{x\to 0} x^2 = 0$$

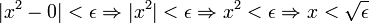

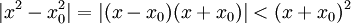

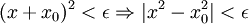

This is a assertion that must be proved. First, lets study the behavior of  near zero;

near zero;

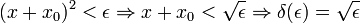

where the arrow pointing right means implies. So, define the function

If  , then

, then  . We have show how to find the delta in the definition of limit, showing that the limit of

. We have show how to find the delta in the definition of limit, showing that the limit of  as x tends to zero is zero.

as x tends to zero is zero.
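The choice $\delta(\varepsilon) = \sqrt{\varepsilon}$ can be spot-checked with a short sketch (function names hypothetical): for several $\varepsilon$ it samples points with $0 < |x| < \delta(\varepsilon)$ and confirms $|x^2 - 0| < \varepsilon$ at each one. This is a sanity check of the proof above, not a replacement for it.

```python
import math

def delta_at_zero(eps: float) -> float:
    """delta(eps) = sqrt(eps), the choice made for lim_{x->0} x**2 = 0."""
    return math.sqrt(eps)

def satisfied(eps: float, samples: int = 1000) -> bool:
    """Check |x**2 - 0| < eps at evenly spaced points with 0 < |x| < delta(eps)."""
    d = delta_at_zero(eps)
    for k in range(1, samples + 1):
        h = d * k / (samples + 1)  # strictly between 0 and delta
        for x in (-h, h):
            if not abs(x**2 - 0) < eps:
                return False
    return True

for eps in (1.0, 1e-2, 1e-6):
    print(f"eps={eps:g}: delta={delta_at_zero(eps):g}, condition holds: {satisfied(eps)}")
```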

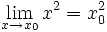

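In fact, for any real number $a$,

$$\lim_{x\to a} x^2 = a^2.$$

Let's see how to construct a suitable function $\delta(\varepsilon)$. If $|x - a| < \delta$, then

$$|x + a| = |(x - a) + 2a| \le |x - a| + 2|a| < \delta + 2|a|,$$

and then

$$|x^2 - a^2| = |x - a|\,|x + a| < \delta(\delta + 2|a|).$$

So, choosing $\delta$ such that $\delta(\delta + 2|a|) = \varepsilon$, that is, taking the positive root of $\delta^2 + 2|a|\delta - \varepsilon = 0$,

$$\delta(\varepsilon) = \sqrt{a^2 + \varepsilon} - |a|,$$

implying that this $\delta(\varepsilon)$ makes $|x^2 - a^2| < \varepsilon$ for any $x$ with $|x - a| < \delta(\varepsilon)$. For $a = 0$ this reduces to the earlier choice $\delta(\varepsilon) = \sqrt{\varepsilon}$.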
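The general formula $\delta(\varepsilon) = \sqrt{a^2 + \varepsilon} - |a|$ can be checked the same way. In this sketch (names hypothetical) it is computed in the algebraically equal form $\frac{\varepsilon}{\sqrt{a^2 + \varepsilon} + |a|}$, a design choice that avoids floating-point cancellation when $\varepsilon$ is tiny compared with $a^2$.

```python
import math

def delta_for_square(a: float, eps: float) -> float:
    """delta(eps) = sqrt(a**2 + eps) - |a| for lim_{x->a} x**2 = a**2.

    Computed as eps / (sqrt(a**2 + eps) + |a|), which is algebraically equal
    but avoids cancellation when eps is tiny compared with a**2.
    """
    return eps / (math.sqrt(a * a + eps) + abs(a))

def satisfied(a: float, eps: float, samples: int = 1000) -> bool:
    """Check |x**2 - a**2| < eps at evenly spaced x with 0 < |x - a| < delta."""
    d = delta_for_square(a, eps)
    for k in range(1, samples + 1):
        h = d * k / (samples + 1)
        for x in (a - h, a + h):
            if not abs(x * x - a * a) < eps:
                return False
    return True

for a in (0.0, 1.0, -3.0, 10.0):
    for eps in (1.0, 1e-4):
        d = delta_for_square(a, eps)
        print(f"a={a:g} eps={eps:g} delta={d:.6g} holds={satisfied(a, eps)}")
```

The identity $\delta(\delta + 2|a|) = \varepsilon$ used in the derivation can also be confirmed numerically for each pair.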

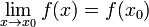

Functions with the property that

$$\lim_{x\to a} f(x) = f(a)$$

at every point $a$ of their domain are called continuous, and they arise very naturally in the physical sciences. Beware that, contrary to the intuition of most people, not every function is continuous.
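A standard example of a function that is not continuous is a unit step (our choice of example, not one from the text): it equals $0$ for $x < 0$ and $1$ for $x \ge 0$. The sketch below samples it on each side of $0$; the left-hand values stay at $0$ and the right-hand values at $1$, so the one-sided limits differ, $\lim_{x\to 0} f(x)$ does not exist, and the property above fails at $a = 0$.

```python
def step(x: float) -> float:
    """Unit step: 0 for x < 0, 1 for x >= 0."""
    return 1.0 if x >= 0 else 0.0

hs = [10.0 ** -k for k in range(1, 8)]
left = [step(-h) for h in hs]    # approaching 0 from the left
right = [step(h) for h in hs]    # approaching 0 from the right
print("left:", left)
print("right:", right)
print("f(0):", step(0.0))
```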

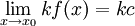

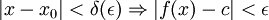

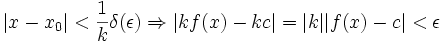

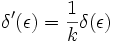

Properties of Limits

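Property one: If $\lim_{x\to a} f(x) = L$, then

$$\lim_{x\to a} k f(x) = kL$$

for any constant $k$.

Proof: If $k = 0$, both sides are $0$ and there is nothing to prove. Otherwise, let $\delta(\varepsilon)$ be the delta function for $f$, and construct the function

$$\delta'(\varepsilon) = \delta\!\left(\frac{\varepsilon}{|k|}\right)$$

for $k \neq 0$. Then

$$0 < |x - a| < \delta'(\varepsilon) \;\Rightarrow\; |f(x) - L| < \frac{\varepsilon}{|k|}.$$

So

$$|k f(x) - kL| = |k|\,|f(x) - L| < |k| \cdot \frac{\varepsilon}{|k|} = \varepsilon.$$

Then the limit of $k f(x)$ is $kL$, for the delta function of $k f(x)$ is $\delta'(\varepsilon)$.

QED.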
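The construction $\delta'(\varepsilon) = \delta(\varepsilon / |k|)$ can be tried on a concrete case. This sketch assumes $f(x) = x^2$ with the delta function $\sqrt{a^2 + \varepsilon} - |a|$ (in a cancellation-free form); all function names are hypothetical. It then checks $|k f(x) - kL| < \varepsilon$ on sampled points within $\delta'(\varepsilon)$ of $a$.

```python
import math

def delta_f(eps: float, a: float) -> float:
    """A delta function for f(x) = x**2 at a: sqrt(a**2 + eps) - |a|, cancellation-free."""
    return eps / (math.sqrt(a * a + eps) + abs(a))

def delta_kf(eps: float, a: float, k: float) -> float:
    """delta'(eps) = delta(eps / |k|), the delta function for k * f (requires k != 0)."""
    return delta_f(eps / abs(k), a)

def kf_condition_holds(a: float, k: float, eps: float, samples: int = 1000) -> bool:
    """Check |k*x**2 - k*a**2| < eps at evenly spaced x with 0 < |x - a| < delta'(eps)."""
    d = delta_kf(eps, a, k)
    for i in range(1, samples + 1):
        h = d * i / (samples + 1)
        for x in (a - h, a + h):
            if not abs(k * x * x - k * a * a) < eps:
                return False
    return True

for k in (3.0, -0.5, 100.0):
    print(k, delta_kf(1e-3, 1.5, k), kf_condition_holds(1.5, k, 1e-3))
```

Note how a larger $|k|$ forces a smaller $\delta'$, exactly as the division by $|k|$ in the proof predicts.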


L'Hôpital's Rule

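L'Hôpital's Rule is used when a limit approaches an indeterminate form. The two main indeterminate forms are $\frac{0}{0}$ and $\frac{\infty}{\infty}$. Other indeterminate forms, such as $0 \cdot \infty$, can be algebraically manipulated into one of these two; for instance, a product $f(x)g(x)$ of the form $0 \cdot \infty$ can be rewritten as $\frac{f(x)}{1/g(x)}$, which has the form $\frac{0}{0}$.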

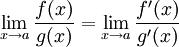

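L'Hôpital's Rule states that if a limit of $\frac{f(x)}{g(x)}$ approaches an indeterminate form as $x$ approaches $a$, then:

$$\lim_{x\to a}\frac{f(x)}{g(x)} = \lim_{x\to a}\frac{f'(x)}{g'(x)},$$

provided that $f$ and $g$ are differentiable near $a$, $g'(x) \neq 0$ near $a$ (except possibly at $a$ itself), and the limit on the right exists (or is $\pm\infty$).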

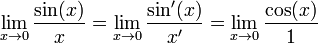

Example:

$$\lim_{x\to 0}\frac{\sin x}{x}$$

Both the numerator and the denominator approach zero as $x$ approaches zero, so the limit is in indeterminate form and l'Hôpital's rule can be applied to it. (Note: you can also use the Sandwich Theorem.)

$$\lim_{x\to 0}\frac{\sin x}{x} = \lim_{x\to 0}\frac{\cos x}{1}$$

Now the limit is in a usable form, which equals $\cos 0 = 1$.
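A numerical sketch of $\frac{\sin x}{x}$ near zero. It also prints $\cos x$, because for $0 < |x| < \frac{\pi}{2}$ the inequality $\cos x < \frac{\sin x}{x} < 1$ holds; squeezing $\frac{\sin x}{x}$ between $\cos x$ and $1$ is the Sandwich Theorem route mentioned in the note, and both bounds tend to 1.

```python
import math

for x in (0.5, 0.1, 0.01, 0.001):
    q = math.sin(x) / x
    # For 0 < |x| < pi/2: cos(x) < sin(x)/x < 1, and both bounds tend to 1.
    print(f"x={x:<6} cos(x)={math.cos(x):.10f}  sin(x)/x={q:.10f}")
```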

If the limit resulting from applying l'Hôpital's Rule is still one of the two mentioned indeterminates, we may apply the rule again (to the limit obtained), and again and again until a usable form is encountered.

Where does it come from

To obtain l'Hôpital's Rule for a limit of $\frac{f(x)}{g(x)}$ which approaches $\frac{0}{0}$ as $x$ approaches $a$, we simply decompose both $f(x)$ and $g(x)$ in terms of their Taylor expansions (centered around $a$). The independent terms of both expansions must be $0$ (because both $f(x)$ and $g(x)$ approach $0$), so if we divide both $f(x)$ and $g(x)$ by $(x - a)$, our limit will stop being indeterminate:

$$\frac{f(x)}{g(x)} = \frac{f'(a)(x-a) + \frac{f''(a)}{2}(x-a)^2 + \cdots}{g'(a)(x-a) + \frac{g''(a)}{2}(x-a)^2 + \cdots} = \frac{f'(a) + \frac{f''(a)}{2}(x-a) + \cdots}{g'(a) + \frac{g''(a)}{2}(x-a) + \cdots} \;\longrightarrow\; \frac{f'(a)}{g'(a)},$$

which is the ratio of the derivatives (assuming $g'(a) \neq 0$).

It could be the case that the Taylor expansions of both the numerator and the denominator have $0$ as the coefficient of the $(x - a)$ term, thus yielding an indeterminate form again. This is the same case mentioned above, where the trick was to repeat the process until a suitable limit was found.
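A concrete case where one application is not enough (our choice of example): $\frac{1 - \cos x}{x^2}$ at $x = 0$. Both Taylor expansions, $1 - \cos x = \frac{x^2}{2} - \frac{x^4}{24} + \cdots$ and $x^2$, have a zero coefficient on the $x$ term. The first application gives $\frac{\sin x}{2x}$, still $\frac{0}{0}$; the second gives $\frac{\cos x}{2}$, which can be evaluated at $0$. The sketch compares the three stages numerically; the function names are illustrative.

```python
import math

def ratio(x: float) -> float:
    """(1 - cos x) / x**2: form 0/0 at x = 0."""
    return (1 - math.cos(x)) / x**2

def after_first_rule(x: float) -> float:
    """f'/g' = sin(x) / (2x): still 0/0 at x = 0, so apply the rule again."""
    return math.sin(x) / (2 * x)

def after_second_rule(x: float) -> float:
    """f''/g'' = cos(x) / 2: no longer indeterminate, evaluate at x = 0."""
    return math.cos(x) / 2

print(after_second_rule(0.0))  # 0.5
for x in (0.1, 0.01, 0.001):
    print(x, ratio(x), after_first_rule(x))
```

All three expressions approach $\frac{1}{2}$, the coefficient ratio of the $x^2$ terms in the two expansions.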

The case of a limit which approaches $\frac{\infty}{\infty}$ can be transformed into the case above by exchanging $\frac{f(x)}{g(x)}$ with $\frac{1/g(x)}{1/f(x)}$, which approaches $\frac{0}{0}$.
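An illustration of the $\frac{\infty}{\infty}$ case, using $\frac{\ln x}{x}$ as $x \to \infty$ (an example we chose). Both $\ln x$ and $x$ grow without bound, while in the exchanged form $\frac{1/x}{1/\ln x}$ both parts shrink toward $0$; the two forms have the same value. Applying l'Hôpital's Rule directly gives $\frac{1/x}{1} \to 0$, and the numbers agree.

```python
import math

for x in (1e1, 1e3, 1e6, 1e12):
    f, g = math.log(x), x          # both grow without bound: form inf/inf
    num, den = 1 / g, 1 / f        # both shrink toward 0: form 0/0
    print(f"x={x:g}  f/g={f / g:.3e}  (1/g)/(1/f)={num / den:.3e}")
```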