Applied linear operators and spectral methods/Lecture 4

More on spectral decompositions

In the course of the previous lecture we essentially proved the following theorem:

Theorem:

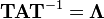

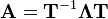

1) If an $n \times n$ matrix $\mathbf{A}$ has $n$ linearly independent real or complex eigenvectors, then $\mathbf{A}$ can be diagonalized.

2) If $\mathbf{T}$ is a matrix whose columns are eigenvectors then $\mathbf{T}^{-1}\mathbf{A}\mathbf{T} = \boldsymbol{\Lambda}$ is the diagonal matrix of eigenvalues.

The factorization $\mathbf{A} = \mathbf{T}\boldsymbol{\Lambda}\mathbf{T}^{-1}$ is called the spectral representation of $\mathbf{A}$.
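The two statements of the theorem can be checked numerically. The following is a minimal sketch, assuming NumPy is available; the matrix `A` is a hypothetical example chosen for illustration and is not taken from the lecture.

```python
import numpy as np

# Hypothetical 2x2 example matrix (not from the lecture).
A = np.array([[4.0, 1.0],
              [2.0, 3.0]])

# Columns of T are eigenvectors; eigvals holds the matching eigenvalues.
eigvals, T = np.linalg.eig(A)
Lam = np.diag(eigvals)

# T^{-1} A T should be the diagonal matrix of eigenvalues ...
diagonalized = np.linalg.inv(T) @ A @ T
# ... and T Lam T^{-1} should give back A (the spectral representation).
reconstructed = T @ Lam @ np.linalg.inv(T)

print(np.allclose(diagonalized, Lam))
print(np.allclose(reconstructed, A))
```

The check relies on `T` being invertible, which is exactly the requirement that the eigenvectors be linearly independent.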

Application

We can use the spectral representation to solve a system of linear homogeneous ordinary differential equations.

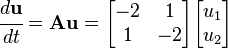

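For example, we could wish to solve the system

$$
\frac{d\mathbf{u}}{dt} = \mathbf{A}\mathbf{u}
$$

where $\mathbf{A}$ is a given $2 \times 2$ matrix. (More generally $\mathbf{A}$ could be an $n \times n$ matrix.)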

Comment:

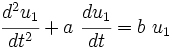

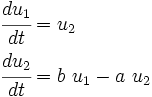

Higher order ordinary differential equations can be reduced to this form. For example,

Introduce

Then the system of equations is

or,

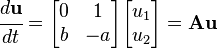

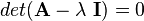
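As an illustration of the reduction to first-order form, the sketch below uses a hypothetical second-order equation $u'' + a\,u' + b\,u = 0$ (an assumption, not necessarily the lecture's example) with $u_1 = u$ and $u_2 = du/dt$. The helper name `first_order_matrix` is invented for this sketch. The eigenvalues of the resulting matrix should coincide with the roots of $\lambda^2 + a\lambda + b = 0$.

```python
import numpy as np

def first_order_matrix(a, b):
    """Matrix A such that u'' + a u' + b u = 0 becomes d/dt [u1, u2] = A [u1, u2],
    with u1 = u and u2 = du/dt."""
    return np.array([[0.0, 1.0],
                     [-b, -a]])

a, b = 3.0, 2.0                      # hypothetical coefficients
A = first_order_matrix(a, b)

# Eigenvalues of A versus roots of the characteristic polynomial of the ODE.
eigvals = np.sort(np.linalg.eigvals(A))
roots = np.sort(np.roots([1.0, a, b]))
print(eigvals, roots)
```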

Returning to the original problem, let us find the eigenvalues and eigenvectors of $\mathbf{A}$. The characteristic equation is

$$
\det(\mathbf{A} - \lambda\mathbf{I}) = 0
$$

so we can calculate the eigenvalues as

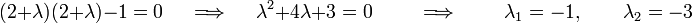

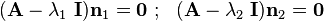

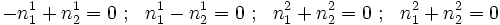

The eigenvectors are given by

or,

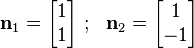

Possible choices of  and

and  are

are

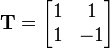

The matrix $\mathbf{T}$ is one whose columns are the eigenvectors of $\mathbf{A}$, i.e.,

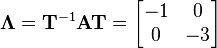

and

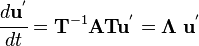

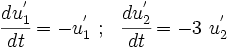

If  the system of equations becomes

the system of equations becomes

Expanded out

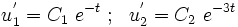

The solutions of these equations are

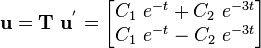

Therefore,

This is the solution of the system of ODEs that we seek.
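The procedure above (diagonalize, solve the decoupled equations, transform back) can be sketched in a few lines. This is a minimal illustration, assuming NumPy; the function name `solve_linear_ode`, the matrix `A` and the initial condition `u0` are hypothetical choices made for this sketch.

```python
import numpy as np

def solve_linear_ode(A, u0, t):
    """Return u(t) for du/dt = A u with u(0) = u0, assuming A is diagonalizable."""
    eigvals, T = np.linalg.eig(A)
    C = np.linalg.solve(T, u0)        # constants C_i from u(0) = T C
    v = C * np.exp(eigvals * t)       # decoupled solutions v_i = C_i exp(lambda_i t)
    # Transform back; for real A and u0 any imaginary parts cancel up to round-off.
    return (T @ v).real

# Hypothetical example: eigenvalues -1 and -3, eigenvectors (1, 1) and (1, -1).
A = np.array([[-2.0, 1.0],
              [1.0, -2.0]])
u0 = np.array([1.0, 0.0])

u_at_1 = solve_linear_ode(A, u0, 1.0)
print(u_at_1)
```

For this particular `A` and `u0` the closed form is $\mathbf{u}(t) = \tfrac{1}{2}e^{-t}(1, 1)^T + \tfrac{1}{2}e^{-3t}(1, -1)^T$, which gives a direct way to check the numerical result.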

Most "generic" matrices have linearly independent eigenvectors. Generally an $n \times n$ matrix will have $n$ distinct eigenvalues unless there are symmetries that lead to repeated values.

Theorem

If $\mathbf{A}$ has $n$ distinct eigenvalues then it has $n$ linearly independent eigenvectors.

Proof:

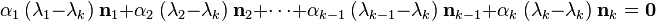

We prove this by induction.

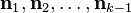

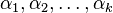

Let  be the eigenvector corresponding to the eigenvalue

be the eigenvector corresponding to the eigenvalue  . Suppose

. Suppose

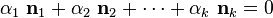

are linearly independent (note that this is true for

are linearly independent (note that this is true for

= 2). The question then becomes: Do there exist

= 2). The question then becomes: Do there exist  not all zero such that the linear combination

not all zero such that the linear combination

Let us multiply the above by  . Then, since

. Then, since

, we have

, we have

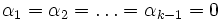

Since  is arbitrary, the above is true only when

is arbitrary, the above is true only when

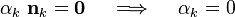

In thast case we must have

This leads to a contradiction.

Therefore  are linearly independent.

are linearly independent.
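A quick numerical illustration of the theorem follows. It is a sketch under stated assumptions: the upper triangular matrix `A` is a hypothetical example whose eigenvalues (its diagonal entries) are distinct, and linear independence is checked through the rank of the eigenvector matrix.

```python
import numpy as np

# Hypothetical non-symmetric matrix; it is upper triangular, so its
# eigenvalues are the diagonal entries 1, 2, 3, which are distinct.
A = np.array([[1.0, 4.0, 5.0],
              [0.0, 2.0, 6.0],
              [0.0, 0.0, 3.0]])

eigvals, X = np.linalg.eig(A)      # columns of X are eigenvectors

# n linearly independent eigenvectors <=> X has full rank.
rank = np.linalg.matrix_rank(X)

# The key step of the proof: (A - lambda_k I) annihilates x_k.
k = 2
residual = (A - eigvals[k] * np.eye(3)) @ X[:, k]

print(rank, np.allclose(residual, 0.0))
```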

Another important class of matrices which are diagonalizable are those which are self-adjoint.

Theorem

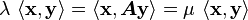

If $\mathbf{A}$ is self-adjoint the following statements are true:

1) $\langle \mathbf{A}\mathbf{x}, \mathbf{x}\rangle$ is real for all $\mathbf{x}$.

2) All eigenvalues are real.

3) Eigenvectors of distinct eigenvalues are orthogonal.

4) There is an orthonormal basis formed by the eigenvectors.

5) The matrix $\mathbf{A}$ can be diagonalized (this is a consequence of the previous statement).

Proof

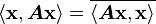

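1) Because the matrix is self-adjoint we have

$$
\langle \mathbf{A}\mathbf{x}, \mathbf{x}\rangle = \langle \mathbf{x}, \mathbf{A}\mathbf{x}\rangle
$$

From the property of the inner product we have

$$
\langle \mathbf{x}, \mathbf{A}\mathbf{x}\rangle = \overline{\langle \mathbf{A}\mathbf{x}, \mathbf{x}\rangle}
$$

Therefore,

$$
\langle \mathbf{A}\mathbf{x}, \mathbf{x}\rangle = \overline{\langle \mathbf{A}\mathbf{x}, \mathbf{x}\rangle}
$$

which implies that $\langle \mathbf{A}\mathbf{x}, \mathbf{x}\rangle$ is real.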

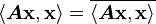

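2) Since $\langle \mathbf{A}\mathbf{x}, \mathbf{x}\rangle$ is real, and $\langle \mathbf{x}, \mathbf{x}\rangle$ is real and positive for $\mathbf{x} \ne \mathbf{0}$, their ratio is real.

Also, from the eigenvalue problem $\mathbf{A}\mathbf{x} = \lambda\mathbf{x}$, we have

$$
\langle \mathbf{A}\mathbf{x}, \mathbf{x}\rangle = \lambda\,\langle \mathbf{x}, \mathbf{x}\rangle
$$

Therefore, $\lambda = \langle \mathbf{A}\mathbf{x}, \mathbf{x}\rangle / \langle \mathbf{x}, \mathbf{x}\rangle$ is real.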

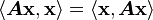

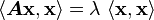

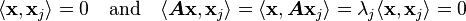

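3) If $(\lambda, \mathbf{x})$ and $(\mu, \mathbf{y})$ are two eigenpairs then

$$
\lambda\,\langle \mathbf{x}, \mathbf{y}\rangle = \langle \mathbf{A}\mathbf{x}, \mathbf{y}\rangle
$$

Since the matrix is self-adjoint and $\mu$ is real, we have

$$
\langle \mathbf{A}\mathbf{x}, \mathbf{y}\rangle = \langle \mathbf{x}, \mathbf{A}\mathbf{y}\rangle = \mu\,\langle \mathbf{x}, \mathbf{y}\rangle
$$

Therefore, if $\lambda \ne \mu$, we must have

$$
\langle \mathbf{x}, \mathbf{y}\rangle = 0
$$

Hence the eigenvectors are orthogonal.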
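Parts 1 to 3 can be checked numerically on a small example. The sketch below assumes NumPy and uses a hypothetical complex Hermitian matrix `A`. The general solver `np.linalg.eig` is used on purpose, so that realness and orthogonality are observed rather than imposed by a symmetric solver.

```python
import numpy as np

# Hypothetical Hermitian (self-adjoint) matrix: A equals its conjugate transpose.
A = np.array([[2.0, 1.0 - 1.0j],
              [1.0 + 1.0j, 3.0]])
assert np.allclose(A, A.conj().T)

rng = np.random.default_rng(0)
x = rng.standard_normal(2) + 1j * rng.standard_normal(2)

# 1) <Ax, x> is real. np.vdot conjugates its first argument,
#    so np.vdot(x, A @ x) computes x^* A x.
quad = np.vdot(x, A @ x)

# 2) The eigenvalues are real (imaginary parts vanish up to round-off).
eigvals, X = np.linalg.eig(A)

# 3) Eigenvectors belonging to distinct eigenvalues are orthogonal.
overlap = np.vdot(X[:, 0], X[:, 1])

print(quad.imag, eigvals.imag, abs(overlap))
```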

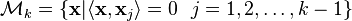

4) This part is a bit more involved. We need to define a manifold first.

Linear manifold

A linear manifold (or vector subspace) $\mathcal{M}$ is a subset of $\mathbb{R}^n$ which is closed under scalar multiplication and vector addition.

Examples are a line through the origin of $n$-dimensional space, a plane through the origin, the whole space, the zero vector, etc.

Invariant manifold

An invariant manifold $\mathcal{M}$ for the matrix $\mathbf{A}$ is a linear manifold for which $\mathbf{x} \in \mathcal{M}$ implies $\mathbf{A}\mathbf{x} \in \mathcal{M}$.

Examples are the null space and range of a matrix $\mathbf{A}$. For the case of a rotation about an axis through the origin in $3$-space, invariant manifolds are the origin, the plane perpendicular to the axis, the whole space, and the axis itself.
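The rotation example can be made concrete. The sketch below assumes NumPy and a hypothetical rotation angle `theta` about the $z$-axis; it checks that the axis and the perpendicular plane are mapped into themselves, while a tilted line through the origin is not.

```python
import numpy as np

theta = 0.7  # hypothetical rotation angle in radians
c, s = np.cos(theta), np.sin(theta)
R = np.array([[c, -s, 0.0],
              [s,  c, 0.0],
              [0.0, 0.0, 1.0]])          # rotation about the z-axis

axis = np.array([0.0, 0.0, 1.0])
in_plane = np.array([1.0, 2.0, 0.0])     # a vector in the plane z = 0

axis_image = R @ axis                    # stays on the axis
plane_image = R @ in_plane               # stays in the plane: z-component is 0

# A line that is neither the axis nor in the plane is not invariant:
tilted = np.array([1.0, 0.0, 1.0])
tilted_image = R @ tilted
off_line = np.linalg.norm(np.cross(tilted, tilted_image))  # nonzero if not parallel

print(axis_image, plane_image, off_line)
```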

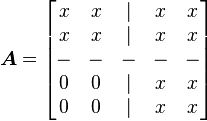

Therefore, if $\mathbf{e}_1, \dots, \mathbf{e}_m$ are a basis for $\mathcal{M}$ and $\mathbf{e}_{m+1}, \dots, \mathbf{e}_n$ are a basis for $\mathcal{M}^{\perp}$ (the orthogonal complement of $\mathcal{M}$) then in this basis $\mathbf{A}$ has the representation

$$
\mathbf{A} = \begin{bmatrix} \mathbf{A}_{11} & \mathbf{A}_{12} \\ \mathbf{0} & \mathbf{A}_{22} \end{bmatrix}
$$

The zero block in the lower left is what makes $\mathcal{M}$ an invariant manifold for $\mathbf{A}$: vectors in $\mathcal{M}$ are mapped back into $\mathcal{M}$.

Note that if $\mathcal{M}$ is an invariant manifold of $\mathbf{A}$ it does not follow that $\mathcal{M}^{\perp}$ is also an invariant manifold.

Now, if $\mathbf{A}$ is self-adjoint then the entries in the off-diagonal spots must be zero too (for an orthonormal basis, $\mathbf{A}_{12} = \mathbf{A}_{21}^{*} = \mathbf{0}$). In that case, $\mathbf{A}$ is block diagonal in this basis.
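The difference between the general and the self-adjoint case shows up directly in the block structure. The sketch below assumes NumPy and real matrices; the helper `in_adapted_basis` and the two example matrices are hypothetical. It represents a matrix in an orthonormal basis whose first vector spans a one-dimensional invariant manifold (an eigenvector).

```python
import numpy as np

def in_adapted_basis(A, x):
    """Represent the real matrix A in an orthonormal basis whose first vector
    spans M = span{x}."""
    n = A.shape[0]
    # QR of [x | I] yields an orthogonal Q whose first column is parallel to x.
    Q, _ = np.linalg.qr(np.column_stack([x, np.eye(n)]))
    return Q.T @ A @ Q

# Non-symmetric example: (1, 0) is an eigenvector, so span{(1, 0)} is invariant.
N = np.array([[2.0, 1.0],
              [0.0, 3.0]])
B_N = in_adapted_basis(N, np.array([1.0, 0.0]))

# Symmetric example: (1, 1) is an eigenvector with eigenvalue 3.
S = np.array([[2.0, 1.0],
              [1.0, 2.0]])
B_S = in_adapted_basis(S, np.array([1.0, 1.0]))

print(np.round(B_N, 12))   # lower-left entry is zero, upper-right is not
print(np.round(B_S, 12))   # both off-diagonal entries are zero
```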

Getting back to part (4), we know that there exists at least one eigenpair $(\lambda_1, \mathbf{x}_1)$ (this is true for any matrix). We now use induction. Suppose that we have found $(k-1)$ mutually orthogonal eigenvectors $\mathbf{x}_j$ with $\mathbf{A}\mathbf{x}_j = \lambda_j\mathbf{x}_j$, where the $\lambda_j$ are real, $j = 1, \dots, k-1$. (Note that the span of each $\mathbf{x}_j$ is an invariant manifold of $\mathbf{A}$, as is the space spanned by all the $\mathbf{x}_j$, and so is the manifold perpendicular to these vectors.)

We form the linear manifold

$$
\mathcal{M}_k = \{\mathbf{x} \;:\; \langle \mathbf{x}, \mathbf{x}_j\rangle = 0, \quad j = 1, \dots, k-1\}
$$

This is the orthogonal complement of the $k-1$ eigenvectors $\mathbf{x}_1, \dots, \mathbf{x}_{k-1}$. If $\mathbf{x} \in \mathcal{M}_k$ then

$$
\langle \mathbf{A}\mathbf{x}, \mathbf{x}_j\rangle = \langle \mathbf{x}, \mathbf{A}\mathbf{x}_j\rangle = \lambda_j\,\langle \mathbf{x}, \mathbf{x}_j\rangle = 0, \qquad j = 1, \dots, k-1
$$

Therefore $\mathbf{A}\mathbf{x} \in \mathcal{M}_k$, which means that $\mathcal{M}_k$ is invariant.

Hence, as long as $k \le n$, the restriction of $\mathbf{A}$ to $\mathcal{M}_k$ has at least one eigenpair, so $\mathcal{M}_k$ contains at least one eigenvector $\mathbf{x}_k$ with real eigenvalue $\lambda_k$.

We can repeat the procedure to get a diagonal matrix in the lower block of the block diagonal representation of $\mathbf{A}$. We then get $n$ mutually orthogonal eigenvectors and so $\mathbf{A}$ can be diagonalized. After normalization, the eigenvectors form an orthonormal basis.
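The inductive construction can be mimicked numerically. This is only a sketch under stated assumptions: it is restricted to real symmetric matrices, the function name `orthonormal_eigenbasis` is hypothetical, and the existence step ("the restriction has at least one eigenpair") is delegated to `np.linalg.eigh` applied to the restricted matrix rather than proved.

```python
import numpy as np

def orthonormal_eigenbasis(A):
    """Build eigenpairs of a real symmetric A one at a time, following the induction."""
    n = A.shape[0]
    vectors, values = [], []
    for k in range(n):
        # Q is orthogonal; its first k columns span the eigenvectors found so far,
        # so the remaining columns are an orthonormal basis B of M_k.
        Q, _ = np.linalg.qr(np.column_stack(vectors + [np.eye(n)]))
        B = Q[:, k:n]
        # A maps M_k into itself, so B^T A B is its symmetric restriction to M_k.
        w, V = np.linalg.eigh(B.T @ A @ B)
        values.append(w[0])
        vectors.append(B @ V[:, 0])      # one unit eigenvector of A lying in M_k
    return np.array(values), np.column_stack(vectors)

# Hypothetical symmetric test matrix.
S = np.array([[2.0, 1.0, 0.0],
              [1.0, 2.0, 1.0],
              [0.0, 1.0, 2.0]])
vals, X = orthonormal_eigenbasis(S)

print(np.allclose(X.T @ X, np.eye(3)))          # orthonormal basis
print(np.allclose(X.T @ S @ X, np.diag(vals)))  # S is diagonal in that basis
```

In practice one would call `np.linalg.eigh` on the full matrix directly; the loop is only meant to mirror the structure of the proof.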

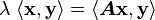

5) This follows from the previous result because each eigenvector can be normalized so that $\langle \mathbf{x}_i, \mathbf{x}_j\rangle = \delta_{ij}$.

We will explore some more of these ideas in the next lecture.
