The Linux Kernel/Memory

Introduction

The kernel has full access to the system's memory and must allow processes to safely access this memory as they require it. Often the first step in doing this is virtual addressing, usually achieved by paging and/or segmentation. Virtual addressing allows the kernel to make a given physical address appear to be another address, the virtual address. Virtual address spaces may be different for different processes; the memory that one process accesses at a particular (virtual) address may be different memory from what another process accesses at the same address. This allows every program to behave as if it is the only one (apart from the kernel) running and thus prevents applications from crashing each other.

On many systems, a program's virtual address may refer to data which is not currently in memory. The layer of indirection provided by virtual addressing allows the operating system to use other data stores, like a hard drive, to store what would otherwise have to remain in main memory (RAM). As a result, operating systems can allow programs to use more memory than the system has physically available. When a program needs data which is not currently in RAM, the CPU signals to the kernel that this has happened, and the kernel responds by writing the contents of an inactive memory block to disk (if necessary) and replacing it with the data requested by the program. The program can then be resumed from the point where it was stopped. This scheme is generally known as demand paging.

Virtual addressing also allows memory to be partitioned into two disjoint areas, one reserved for the kernel (kernel space) and the other for applications (user space). The processor does not permit applications to address kernel memory, which prevents an application from damaging the running kernel. This fundamental partition of the memory space has strongly influenced the design of current general-purpose kernels and is almost universal in such systems, Linux being one of them.

Process Memory Layout

A 32-bit processor can address a maximum of 4GB of memory. Linux kernels split the 4GB address space between user processes and the kernel; under the most common configuration, the first 3GB of the 32-bit range are given over to user space, and the kernel gets the final 1GB starting at 0xc0000000. Sharing the address space gives a number of performance benefits; in particular, the hardware's translation lookaside buffer (TLB) can be shared between the kernel and user space.

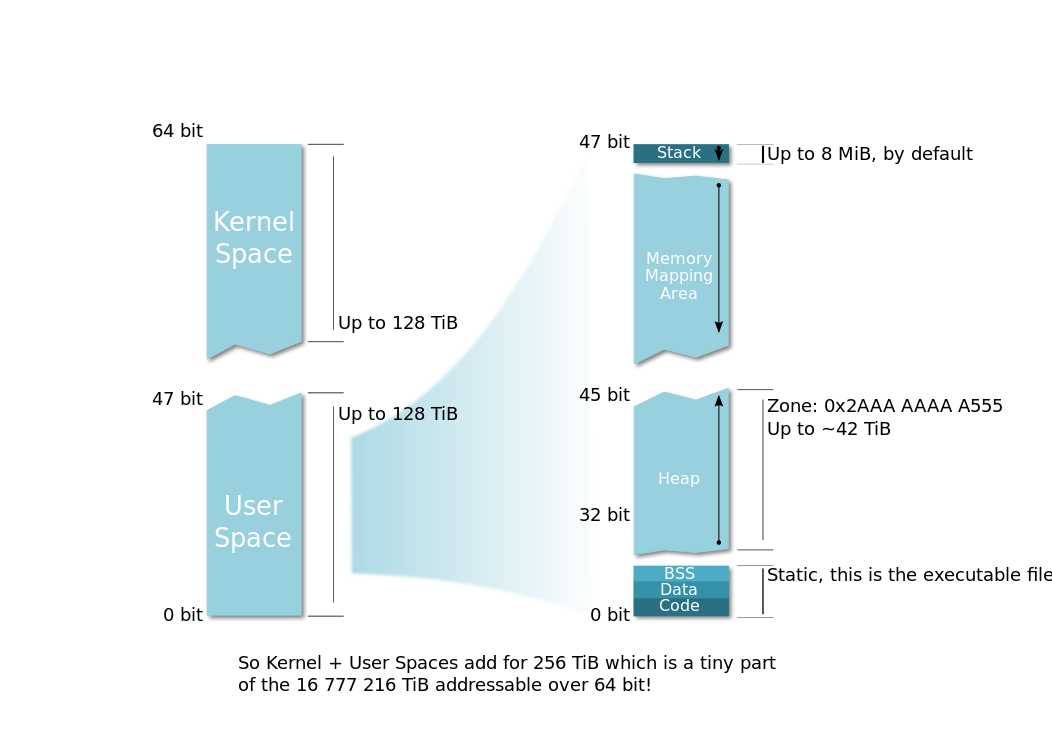

In x86-64, only the least significant 48 bits of a virtual address are actually used in address translation (page table lookup). The remaining bits, 48 through 63, must be copies of bit 47, or the processor will raise an exception. Addresses complying with this rule are said to be in "canonical form." Canonical form addresses run from 0 through 00007FFF'FFFFFFFF, and from FFFF8000'00000000 through FFFFFFFF'FFFFFFFF, for a total of 256 TB of usable virtual address space. This is 65,536 times the virtual address space of a 32-bit machine.

Linux takes the higher-addressed half of the address space for itself (kernel space) and leaves the lower-addressed half for user space. The "canonical address" design has, in effect, two memory halves: the lower half starts at 00000000'00000000 and "grows upwards" as more virtual address bits become available, while the higher half is "docked" to the top of the address space and grows downwards.

Memory management Linux API

Linux inherits its two basic system calls related to memory management from Unix: brk() and mmap().

- brk() and sbrk() are used to control the amount of memory allocated to the data segment of the calling process by dynamically changing its program break. The program break is the address of the first location beyond the current end of the data region, and determines the maximum space that can be allocated by the process. The amount of available space increases as the break value increases. The added available space is initialized to a value of zero.

- mmap() maps files or devices into memory. It is a method of memory-mapped file I/O. It naturally implements demand paging, because file contents are not read from disk initially and do not use physical RAM at all. The actual reads from disk are performed in a "lazy" manner, after a specific location is accessed. When the memory is no longer needed, it is important to release the mapping with munmap(). Protection information can be managed using mprotect() and special treatment can be enforced using madvise(). In Linux, mmap() can create several types of mappings, such as anonymous mappings, shared mappings and private mappings. Using the MAP_ANONYMOUS flag, mmap() can map an area of the process's virtual memory that is not backed by any file and whose contents are initialized to zero.

These functions are typically called from a higher-level memory management library function such as the C standard library's malloc() or the C++ new operator.

User space API

#include <unistd.h>

int brk(void *end_data_segment);

The brk() subroutine sets the program break value to the value of the end_data_segment parameter and changes the amount of available space accordingly.

Upon successful completion, the brk() subroutine returns a value of 0. If it is unsuccessful, a value of −1 is returned and the errno global variable is set to indicate the error.

#include <unistd.h>

void *sbrk(intptr_t increment);

The sbrk() subroutine adds to the program break value the number of bytes contained in the increment parameter and changes the amount of available space accordingly. The increment parameter can be a negative number, in which case the amount of available space is decreased.

Upon successful completion, the sbrk() subroutine returns the prior value of the program break (if the available space is increased, the return value points to the start of the new area). If it is unsuccessful, (void *) −1 is returned and the errno global variable is set to indicate the error.

Linux system calls

The system call prototypes can be found in the include/linux/syscalls.h header file:

asmlinkage long sys_brk(unsigned long brk);

asmlinkage long sys_mmap_pgoff(unsigned long addr, unsigned long len,
                               unsigned long prot, unsigned long flags,
                               unsigned long fd, unsigned long pgoff);

On Linux, sbrk() is not a separate system call, but a C library function that calls sys_brk() and keeps some internal state so that it can return the previous break value.

Kernel Memory Allocation

kmalloc()

kmalloc() is the normal method of allocating memory in the kernel for objects smaller than the page size. It is defined in the include/linux/slab.h header file:

#include <linux/slab.h>

void *kmalloc(size_t size, gfp_t flags);

The first argument, size, is the size (in bytes) of the block of memory to be allocated. The second argument, flags, holds the allocation flags or GFP flags, a set of macros that the caller provides to control the type of memory requested. The most commonly used values for flags are GFP_KERNEL and GFP_ATOMIC, but there are others to consider.

GFP flags

Memory-allocation requests in the kernel are always qualified by a set of GFP flags ("GFP" initially came from "get free page") describing what can and cannot be done to satisfy the request. The most commonly used flags are GFP_ATOMIC and GFP_KERNEL, though they are actually built up from lower-level flags. The full set of flags is huge; they can be found in the include/linux/gfp.h header file.

Slab Allocator

Slab allocation is a memory management mechanism intended for the efficient allocation of kernel objects. It reduces the fragmentation caused by repeated allocations and deallocations. The technique retains allocated memory that contained a data object of a certain type so that it can be reused by subsequent allocations of objects of the same type. Linux used the SLAB allocator as its default from kernel 2.2 until 2.6.23, when SLUB became the default; SLAB remained available as a build option.

Basics

A slab can be thought of as an array of objects of a certain type or of the same size, spanning one or more contiguous pages of memory; for example, the slab named "task_struct" holds objects of type struct task_struct, used by the scheduling subsystem. Other slabs store objects used by other subsystems, and there are also slabs for dynamic allocations inside the kernel, such as the "kmalloc-64" slab that holds chunks of up to 64 bytes requested via kmalloc() calls. In a slab, each object can be allocated and freed separately.

The primary motivation for slab allocation is that the initialization and destruction of kernel data objects can actually outweigh the cost of allocating memory for them. As object creation and deletion are widely employed by the kernel, overhead costs of initialization can result in significant performance drops. The notion of object caching was therefore introduced in order to avoid the invocation of functions used to initialize object state.

With slab allocation, memory chunks suitable to fit data objects of certain type or size are preallocated. The slab allocator keeps track of these chunks, known as caches, so that when a request to allocate memory for a data object of a certain type is received, it can instantly satisfy the request with an already allocated slot. Destruction of the object does not free up the memory, but only opens a slot which is put in the list of free slots by the slab allocator. The next call to allocate memory of the same size will return the now unused memory slot. This process eliminates the need to search for suitable memory space and greatly alleviates memory fragmentation. In this context, a slab is one or more contiguous pages in the memory containing pre-allocated memory chunks.

Slab allocation provides a kind of front-end to the zoned buddy allocator for those sections of the kernel that require more flexible memory allocation than the standard 4KB page size.

SLAB allocator

SLAB is the name given to the first slab allocator implemented in the kernel, to distinguish it from later allocators that use the same interface. It is heavily based on Jeff Bonwick's paper "The Slab Allocator: An Object-Caching Kernel Memory Allocator" (1994), which describes the first slab allocator, implemented in the SunOS 5.4 kernel.

SLUB allocator

SLUB (short for "the unqueued slab allocator") is the successor to SLAB and has been the default Linux allocator since 2.6.23.

SLOB allocator

Unfortunately, the SLAB and SLUB allocators consume a large amount of memory for their slabs, which is a serious drawback on small systems with memory constraints, such as embedded systems. To overcome this, the SLOB (Simple List Of Blocks) allocator was designed in January 2006 by Matt Mackall as a simpler method of allocating kernel objects.

The SLOB allocator uses a first-fit algorithm, which chooses the first available space that is large enough for the request. This algorithm reduces memory consumption, but a major limitation of this method is that it suffers greatly from fragmentation.

The SLOB allocator is also used as a fallback by the kernel build system when no slab allocator is defined (when the CONFIG_SLAB flag is disabled).

The SLOB allocator implementation lives in the source file mm/slob.c.

Page Allocation

The page allocator (or "buddy allocator") is a low-level allocator that deals with physical memory. It delivers physical pages (usually with a size of 4096 bytes) of free memory to high-level memory consumers such as the slab allocators and kmalloc(). As the ultimate source of memory in the system, the page allocator must ensure that memory is always available, since a failure providing memory to a critical kernel subsystem can lead to a general system failure or a kernel panic.

The page allocator divides physical memory into "zones," each of which corresponds to memory with specific characteristics. ZONE_DMA contains memory at the bottom of the address range for use by severely challenged devices, for example, while ZONE_NORMAL may contain most memory on the system. 32-bit systems have a ZONE_HIGHMEM for memory that is not directly mapped into the kernel's address space. Depending on the characteristics of any given allocation request, the page allocator will search the available zones in a specific priority order. For the curious, /proc/zoneinfo gives a lot of information about the zones in use on any given system.

Within a zone, memory is grouped into page blocks, each of which can be marked with a migration type describing how the block should be allocated.

Memory addressing

Pages

In Linux, different architectures have different page sizes. The original page size, used on the i386 architecture and still the most common, is 4096 bytes (4 KB). The page size (in bytes) of the current architecture is defined by the PAGE_SIZE macro in the <asm/page.h> header file. User space programs can get this value using the getpagesize() library function. A related macro is PAGE_SHIFT, which contains the number of bits to shift an address right to get its page number: 12 bits for 4 KB pages.

One of the most fundamental kernel data structures related to memory management is struct page. The kernel keeps track of the status of every page of physical memory present in the system using variables of this type. There are millions of pages in a modern system, and therefore there are millions of these structures in memory.

The full definition of struct page can be found in <linux/mm_types.h>.