Engineering Analysis/Linear Independence and Basis

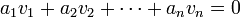

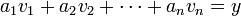

A set of vectors  are said to be linearly dependant on one another if any vector v from the set can be constructed from a linear combination of the other vectors in the set. Given the following linear equation:

are said to be linearly dependant on one another if any vector v from the set can be constructed from a linear combination of the other vectors in the set. Given the following linear equation:

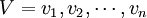

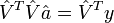

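The set of vectors V is linearly independent if this equation can only be satisfied when all of the a coefficients are zero. If we combine the v vectors together into a single row vector (that is, a matrix whose columns are the vectors):

$$\hat{V} = [v_1 \; v_2 \; \cdots \; v_n]$$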

And we combine all the a coefficients into a single column vector:

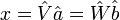

We have the following linear equation:

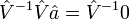

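For a square matrix $\hat{V}$, this equation is satisfied only by $\hat{a} = 0$ exactly when $\hat{V}$ is invertible, since we can then premultiply both sides by the inverse:

$$\hat{V}^{-1}\hat{V}\hat{a} = \hat{V}^{-1}0$$

$$\hat{a} = 0$$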

Remember that for a square matrix to be invertible, its determinant must be non-zero.
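The square case can be checked numerically. The following is a minimal sketch in pure Python. It assumes the vectors are given as equal-length lists of numbers, and `determinant` and `is_linearly_independent_square` are illustrative names, not part of any library. The code stacks the vectors as the columns of $\hat{V}$ and tests whether the determinant is non-zero. It uses exact `Fraction` arithmetic so that round-off cannot disguise a zero determinant.

```python
from fractions import Fraction


def determinant(matrix):
    """Determinant by Gaussian elimination with exact Fraction arithmetic."""
    a = [[Fraction(x) for x in row] for row in matrix]
    n = len(a)
    det = Fraction(1)
    for col in range(n):
        # Find a row at or below the diagonal with a non-zero entry in this column.
        pivot = next((r for r in range(col, n) if a[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)  # no pivot: the matrix is singular
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            det = -det  # swapping two rows flips the sign
        det *= a[col][col]
        # Eliminate the entries below the pivot.
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return det


def is_linearly_independent_square(vectors):
    """n vectors of length n are independent iff det(V-hat) != 0."""
    V = [list(row) for row in zip(*vectors)]  # the vectors become columns of V-hat
    if len(V) != len(vectors):
        raise ValueError("expected n vectors of length n")
    return determinant(V) != 0


print(is_linearly_independent_square([[1, 0], [0, 1]]))  # True
print(is_linearly_independent_square([[1, 2], [2, 4]]))  # False, since v_2 = 2 v_1
```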

Non-Square Matrix V

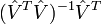

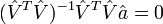

If the matrix  is not square, then the determinate can not be taken, and therefore the matrix is not invertable. To solve this problem, we can premultiply by the transpose matrix:

is not square, then the determinate can not be taken, and therefore the matrix is not invertable. To solve this problem, we can premultiply by the transpose matrix:

And then the square matrix  must be invertable:

must be invertable:
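For the non-square case, a similar sketch forms the square matrix $\hat{V}^T\hat{V}$ and tests its determinant instead. It is pure Python again, with hypothetical helper names. The helpers are repeated so that the block runs on its own.

```python
from fractions import Fraction


def determinant(matrix):
    """Determinant by Gaussian elimination with exact Fraction arithmetic."""
    a = [[Fraction(x) for x in row] for row in matrix]
    n = len(a)
    det = Fraction(1)
    for col in range(n):
        pivot = next((r for r in range(col, n) if a[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)  # no pivot: singular
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            det = -det  # a row swap flips the sign
        det *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return det


def transpose(m):
    return [list(row) for row in zip(*m)]


def matmul(a, b):
    """Plain matrix product of two matrices stored as lists of rows."""
    return [[sum(Fraction(x) * Fraction(y) for x, y in zip(row, col))
             for col in zip(*b)] for row in a]


def is_linearly_independent(vectors):
    """Independence test that also works when V-hat is not square."""
    V = transpose(vectors)          # the vectors become the columns of V-hat
    gram = matmul(transpose(V), V)  # V^T V is always n x n
    return determinant(gram) != 0


# Two vectors in R^3: V-hat is 3 x 2, so det(V-hat) does not exist.
print(is_linearly_independent([[1, 0, 1], [0, 1, 1]]))  # True
print(is_linearly_independent([[1, 2, 3], [2, 4, 6]]))  # False
```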

Rank

The rank of a matrix is the largest number of linearly independent columns in the matrix; this always equals the largest number of linearly independent rows.

To determine the rank, the matrix is typically reduced to row-echelon form. The number of non-zero rows in the reduced form (equivalently, the number of pivots) is the rank of the matrix.
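The row-reduction procedure can be sketched as follows. This is an illustrative pure-Python version with a hypothetical `rank` function. It uses exact fractions and counts one non-zero row per pivot found.

```python
from fractions import Fraction


def rank(matrix):
    """Rank = number of non-zero rows once the matrix is in row-echelon form."""
    a = [[Fraction(x) for x in row] for row in matrix]
    rows = len(a)
    cols = len(a[0]) if rows else 0
    pivot_row = 0  # index of the next row that should receive a pivot
    for col in range(cols):
        if pivot_row == rows:
            break
        pivot = next((r for r in range(pivot_row, rows) if a[r][col] != 0), None)
        if pivot is None:
            continue  # this column has no pivot; move one column to the right
        a[pivot_row], a[pivot] = a[pivot], a[pivot_row]
        # Zero out everything below the pivot.
        for r in range(pivot_row + 1, rows):
            factor = a[r][col] / a[pivot_row][col]
            for c in range(col, cols):
                a[r][c] -= factor * a[pivot_row][c]
        pivot_row += 1
    # Rows 0..pivot_row-1 each hold a pivot; every row below is all zeros.
    return pivot_row


print(rank([[1, 2, 3], [2, 4, 6], [1, 0, 1]]))  # 2: the second row is twice the first
```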

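If we multiply two matrices A and B, and the result is C:

$$AB = C$$

Then the rank of C is at most the smaller of the ranks of A and B:

$$\operatorname{Rank}(C) \le \operatorname{min}[\operatorname{Rank}(A), \operatorname{Rank}(B)]$$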
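A small worked example shows why this relationship is an upper bound rather than an equality. The matrices are chosen purely for illustration. Each has rank 1, yet their product is the zero matrix:

$$A = \begin{bmatrix}1 & 0\\ 0 & 0\end{bmatrix}, \quad B = \begin{bmatrix}0 & 0\\ 0 & 1\end{bmatrix}, \quad AB = \begin{bmatrix}0 & 0\\ 0 & 0\end{bmatrix}$$

$$\operatorname{Rank}(AB) = 0 < 1 = \operatorname{min}[\operatorname{Rank}(A), \operatorname{Rank}(B)]$$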

Span

The span of a set of vectors V is the set of all vectors that can be created by a linear combination of the vectors in V.
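One practical use of the span is testing whether a given vector belongs to it. Here is a minimal sketch, assuming vectors are plain Python lists; the helper names are hypothetical. A vector $y$ lies in the span exactly when appending it as an extra column does not increase the rank.

```python
from fractions import Fraction


def rank(matrix):
    """Rank via row-echelon form (number of pivots), with exact fractions."""
    a = [[Fraction(x) for x in row] for row in matrix]
    rows = len(a)
    cols = len(a[0]) if rows else 0
    pivot_row = 0
    for col in range(cols):
        if pivot_row == rows:
            break
        pivot = next((r for r in range(pivot_row, rows) if a[r][col] != 0), None)
        if pivot is None:
            continue
        a[pivot_row], a[pivot] = a[pivot], a[pivot_row]
        for r in range(pivot_row + 1, rows):
            factor = a[r][col] / a[pivot_row][col]
            for c in range(col, cols):
                a[r][c] -= factor * a[pivot_row][c]
        pivot_row += 1
    return pivot_row


def in_span(vectors, y):
    """y is in span{v_i} iff appending y as a column leaves the rank unchanged."""
    V = [list(row) for row in zip(*vectors)]           # vectors as columns
    augmented = [row + [yi] for row, yi in zip(V, y)]  # the matrix [V-hat | y]
    return rank(augmented) == rank(V)


basis = [[1, 0, 0], [0, 1, 0]]    # spans the x-y plane in R^3
print(in_span(basis, [3, 4, 0]))  # True
print(in_span(basis, [0, 0, 1]))  # False
```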

Basis

A basis is a set of linearly independent vectors that span the entire vector space.

Basis Expansion

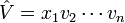

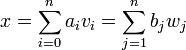

If we have a vector  , and V has basis vectors

, and V has basis vectors  , by definition, we can write y in terms of a linear combination of the basis vectors:

, by definition, we can write y in terms of a linear combination of the basis vectors:

or

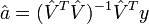

If  is invertable, the answer is apparent, but if

is invertable, the answer is apparent, but if  is not invertable, then we can perform the following technique:

is not invertable, then we can perform the following technique:

And we call the quantity  the left-pseudoinverse of

the left-pseudoinverse of  .

.
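The left-pseudoinverse formula can be evaluated directly. The sketch below is pure Python with exact fractions. `inverse`, `matmul`, and `expansion_coefficients` are hypothetical helpers written for this illustration. The basis vectors are assumed to be linearly independent so that $\hat{V}^T\hat{V}$ is invertible.

```python
from fractions import Fraction


def transpose(m):
    return [list(row) for row in zip(*m)]


def matmul(a, b):
    """Plain matrix product of two matrices stored as lists of rows."""
    return [[sum(Fraction(x) * Fraction(y) for x, y in zip(row, col))
             for col in zip(*b)] for row in a]


def inverse(m):
    """Gauss-Jordan inverse of a square matrix; raises ValueError if singular."""
    n = len(m)
    # Build the augmented matrix [m | I].
    a = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
         for i, row in enumerate(m)]
    for col in range(n):
        pivot = next((r for r in range(col, n) if a[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is singular")
        a[col], a[pivot] = a[pivot], a[col]
        p = a[col][col]
        a[col] = [x / p for x in a[col]]     # scale the pivot row so the pivot is 1
        for r in range(n):
            if r != col and a[r][col] != 0:  # clear the rest of the column
                factor = a[r][col]
                a[r] = [x - factor * y for x, y in zip(a[r], a[col])]
    return [row[n:] for row in a]            # the right half is now m^{-1}


def expansion_coefficients(basis, y):
    """Solve V-hat a-hat = y with the left-pseudoinverse (V^T V)^{-1} V^T."""
    V = transpose(basis)                     # basis vectors as columns
    Vt = transpose(V)
    left_pinv = matmul(inverse(matmul(Vt, V)), Vt)
    a = matmul(left_pinv, [[yi] for yi in y])  # treat y as a column vector
    return [row[0] for row in a]


# Two basis vectors of a plane in R^3, and y = 2 v_1 + 3 v_2.
print(expansion_coefficients([[1, 0, 1], [0, 1, 1]], [2, 3, 5]))
# [Fraction(2, 1), Fraction(3, 1)]
```

If y does not lie in the span of the basis vectors, the same formula returns the least-squares coefficients rather than an exact expansion.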

Change of Basis

Frequently, it is useful to change the basis vectors to a different set of vectors that span the set, but have different properties. If we have a space V, with basis vectors  and a vector in V called x, we can use the new basis vectors

and a vector in V called x, we can use the new basis vectors  to represent x:

to represent x:

or,

If V is invertable, then the solution to this problem is simple.

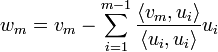
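Here is a minimal sketch of the change of basis, assuming both bases are given as lists of n vectors of length n, so that both basis matrices are square. The code reconstructs $x = \hat{V}\hat{a}$ and then solves $\hat{W}\hat{b} = x$ with a Gauss-Jordan inverse. The helper names are hypothetical.

```python
from fractions import Fraction


def transpose(m):
    return [list(row) for row in zip(*m)]


def matmul(a, b):
    """Plain matrix product of two matrices stored as lists of rows."""
    return [[sum(Fraction(x) * Fraction(y) for x, y in zip(row, col))
             for col in zip(*b)] for row in a]


def inverse(m):
    """Gauss-Jordan inverse of a square matrix; raises ValueError if singular."""
    n = len(m)
    a = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
         for i, row in enumerate(m)]
    for col in range(n):
        pivot = next((r for r in range(col, n) if a[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is singular")
        a[col], a[pivot] = a[pivot], a[col]
        p = a[col][col]
        a[col] = [x / p for x in a[col]]
        for r in range(n):
            if r != col and a[r][col] != 0:
                factor = a[r][col]
                a[r] = [x - factor * y for x, y in zip(a[r], a[col])]
    return [row[n:] for row in a]


def change_of_basis(old_basis, new_basis, a):
    """Given x = V-hat a-hat, return b-hat such that x = W-hat b-hat."""
    V = transpose(old_basis)           # old basis vectors as columns
    W = transpose(new_basis)           # new basis vectors as columns
    x = matmul(V, [[ai] for ai in a])  # x as a column vector
    b = matmul(inverse(W), x)          # b-hat = W^{-1} V a-hat
    return [row[0] for row in b]


# x = 3 e_1 + 1 e_2 in the standard basis, re-expressed in {(1, 1), (1, -1)}.
print(change_of_basis([[1, 0], [0, 1]], [[1, 1], [1, -1]], [3, 1]))
# [Fraction(2, 1), Fraction(1, 1)], i.e. x = 2 w_1 + 1 w_2
```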

Gram-Schmidt Orthogonalization

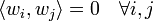

If we have a set of basis vectors that are not orthogonal, we can use a process known as orthogonalization to produce a new set of basis vectors for the same space that are orthogonal:

- Given:

- Find the new basis

- Such that

We can define the vectors as follows:

Notice that the vectors produced by this technique are orthogonal to each other, but they are not necessarily orthonormal. To make the w vectors orthonormal, you must divide each one by its norm:

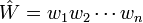
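The recurrence can be sketched in pure Python. The helper names are hypothetical. The orthogonalization step uses exact `Fraction` arithmetic, and floating point appears only in the final normalization. The sketch assumes the input vectors are linearly independent; a dependent vector could produce a zero $w$ and a later division by zero.

```python
import math
from fractions import Fraction


def dot(u, v):
    """Standard inner product <u, v>."""
    return sum(Fraction(x) * Fraction(y) for x, y in zip(u, v))


def gram_schmidt(vectors):
    """Return orthogonal (not normalized) w_1..w_n spanning the same space."""
    ws = []
    for v in vectors:
        w = [Fraction(x) for x in v]
        for prev in ws:
            # Subtract the projection of v onto each earlier w_i.
            coeff = dot(v, prev) / dot(prev, prev)
            w = [wi - coeff * pi for wi, pi in zip(w, prev)]
        ws.append(w)
    return ws


def normalize(w):
    """Divide w by its norm, returning floats because the norm may be irrational."""
    norm = math.sqrt(dot(w, w))
    return [float(x) / norm for x in w]


ws = gram_schmidt([[1, 1, 0], [1, 0, 1]])
print(dot(ws[0], ws[1]))  # 0
print(normalize(ws[1]))
```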

Reciprocal Basis

A reciprocal basis is a special type of basis that is related to the original basis. The reciprocal basis $\hat{W}$ can be defined as:

$$\hat{W} = [\hat{V}^T]^{-1}$$

Transposing this definition gives $\hat{W}^T = \hat{V}^{-1}$, so $\hat{W}^T\hat{V} = I$. In other words, each reciprocal basis vector $w_i$ satisfies $\langle w_i, v_j \rangle = 1$ when $i = j$ and $0$ otherwise.
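Here is a short sketch, assuming a square, invertible $\hat{V}$ given as a list of its column vectors; the helper names are hypothetical. It computes $\hat{W} = [\hat{V}^T]^{-1}$ and checks that every $w_i$ has inner product 1 with $v_i$ and 0 with the other basis vectors.

```python
from fractions import Fraction


def transpose(m):
    return [list(row) for row in zip(*m)]


def matmul(a, b):
    """Plain matrix product of two matrices stored as lists of rows."""
    return [[sum(Fraction(x) * Fraction(y) for x, y in zip(row, col))
             for col in zip(*b)] for row in a]


def inverse(m):
    """Gauss-Jordan inverse of a square matrix; raises ValueError if singular."""
    n = len(m)
    a = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
         for i, row in enumerate(m)]
    for col in range(n):
        pivot = next((r for r in range(col, n) if a[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is singular")
        a[col], a[pivot] = a[pivot], a[col]
        p = a[col][col]
        a[col] = [x / p for x in a[col]]
        for r in range(n):
            if r != col and a[r][col] != 0:
                factor = a[r][col]
                a[r] = [x - factor * y for x, y in zip(a[r], a[col])]
    return [row[n:] for row in a]


def reciprocal_basis(basis):
    """Return the columns of W-hat = (V-hat^T)^{-1}, one vector per entry."""
    V = transpose(basis)         # basis vectors as columns of V-hat
    W = inverse(transpose(V))    # (V-hat^T)^{-1}
    return transpose(W)          # columns of W-hat as a list of vectors


vs = [[2, 0], [1, 1]]            # v_1 = (2, 0), v_2 = (1, 1)
ws = reciprocal_basis(vs)
# Entry (i, j) below is <w_i, v_j>; it should be the identity matrix.
print(matmul(ws, transpose(vs)) == [[1, 0], [0, 1]])  # True
```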